Building Blocks

Latest revision as of 12:52, 21 February 2022

The purpose of the Building Blocks (BB) assessment is to assess the suitability of the BB identified and catalogued in Task 1.5 for use within the DE4A project. It thus bridges outputs from WP1 and requirements from WP4 to provide input for the technical, operational and administrative considerations in the architectural assessments carried out here. Over 40 BB have been considered for this assessment, 31 of which are assessed in the first phase of this work. The results of the assessment show that there is a mature and acceptable stock of solution building blocks that can be considered as potential candidates for implementation by the pilots, either in their entirety or partially, with the needed upgrades.

Theoretical background

Objectives and scope

To reach the goal outlined above, this section delves into the architectural evaluation of the building blocks catalogued as useful for DE4A. It is important to note that the term “building block” in the context of this assessment refers to a Solution Building Block in the TOGAF sense.

The most important step in assessing the BB is determining a methodology that supports a common description framework for the BB, while providing the means to determine quantitative and/or qualitative evaluation criteria for the BB under consideration. The outcome of the assessment is a succinct list of recommendations for BB use by the pilots in WP4. In addition to defining the methodology, a gap analysis is performed based on both the pilots’ requirements and the common description framework of the BB, considering the results from the assessment, the project requirements and the common PSA principles. The overall process of conceptual considerations, empirical evaluation, gap analysis and piloting recommendations is denoted as the DE4A generic methodology for the evaluation of architecture building blocks.

Available methodologies

In order to provide continuity and justification of the methodology that is being developed, we first outline and assess the suitability of the currently available methodologies in view of the implementation context and the objectives of the DE4A project. To that end, both generic EU/EC assessment methodologies and past LSP project-specific methodologies are considered.

Common assessment method for standards and specifications (CAMSS)

CAMSS is part of the ISA² interoperability solutions evaluation toolkit for public administrations, businesses and citizens. It provides a method to assist in the assessment of ICT standards and specifications. The main objective of CAMSS is achieving interoperability and avoiding vendor lock-in. In that sense, CAMSS criteria evaluate (among other things) the openness of standards and specifications. This is done by employing the CAMSS tools and adapting the evaluation according to the needs of an individual Member State.

In the context of DE4A, relying solely on CAMSS does not provide the means for BB suitability and gap analysis in relation to the piloting needs. Moreover, it does not provide any selection criteria or a taxonomy for consistent mapping of the different BB onto a common comparable framework.

Past project-specific methodologies

eSENS

eSENS has developed its own methodology for BB assessment, which is mainly an adaptation of CAMSS and the Asset Description Metadata Schema (ADMS), supported by inputs from the eSENS deliverable D6.1 (see Table 5 in eSENS D3.1). Its objective is to propose a documentation format and to define criteria for the maturity and sustainability assessment of building blocks. The overall framework consists of three steps: 1) the Consideration step; 2) the Assessment step; and 3) the Recommendation step. It produces a list of assessment criteria to be used for BB evaluation. These criteria, however, are very general and not architecture-specific; they are applicable and valuable only if used in collaboration with the legal, business, organizational, technical and implementation teams.

It is important to note that the assessment methodology employed in eSENS was developed with a different aim from ours: its analysis and recommendations refer to the desired BB qualities that are needed to meet the maturity levels and the sustainability criteria envisaged by the project. Thus, although it produces guidelines for assessment, it does not provide concrete output in terms of actual scores, analysis and recommendations for BB. Moreover, it does not provide a comparable baseline when multiple BB have to be considered for the same pilot, and it is based on the assumption that the existing BB represent the exhaustive list of possible solutions from which a suitable match should be chosen. In the case of DE4A, such an assumption does not hold, as there may be cases where a certain BB is not mature enough to be recommended for piloting but should not be completely disregarded either. More importantly, the methodology developed here is used for actual assessment and is to be fine-tuned at a later stage in connection with the general architecture lifecycle development.

TOOP

Like eSENS, the overall idea of the TOOP assessment methodology is to reuse existing frameworks and building blocks provided by CEF, eSENS, and other initiatives. First, an initial inventory of existing e-Government building blocks is proposed. Then, the principles of selection of building blocks for OOP applications are provided, together with high-level views of the architecture. Finally, an analysis of selected building blocks is done with respect to their relevance, applicability, sustainability, need for further development and external interfaces.

The main criteria for inclusion of a building block in TOOP are:

- The specific project requirements;

- The TOOP pilots’ needs;

- Usability in long-term applications (maintenance and support provided).

As a result, the building blocks are categorized into three basic groups:

- BB that provide capabilities needed by all or most TOOP Pilot Areas;

- BB that provide capabilities needed or probably needed by some TOOP Pilot Areas;

- BB that provide capabilities not needed by the TOOP Pilot Areas.
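The three-way grouping above can be illustrated with a minimal sketch. The function name, the pilot-area names and the threshold that separates "most" from "some" are hypothetical; TOOP does not state a numeric cut-off, so the value used here is only an assumption for illustration.

```python
from __future__ import annotations

from enum import Enum


class Group(Enum):
    ALL_OR_MOST = "needed by all or most pilot areas"
    SOME = "needed or probably needed by some pilot areas"
    NOT_NEEDED = "not needed by the pilot areas"


def toop_group(needed_by: set[str], pilot_areas: set[str],
               most_threshold: float = 0.5) -> Group:
    """Assign a building block to one of the three groups.

    most_threshold is a hypothetical cut-off: the share of pilot areas
    above which a capability counts as needed by "all or most" of them.
    """
    relevant = needed_by & pilot_areas      # ignore unknown area names
    if not relevant:
        return Group.NOT_NEEDED
    share = len(relevant) / len(pilot_areas)
    return Group.ALL_OR_MOST if share > most_threshold else Group.SOME


# Hypothetical pilot areas, used only to exercise the function.
areas = {"area_a", "area_b", "area_c"}
print(toop_group({"area_a", "area_b"}, areas).value)
print(toop_group({"area_a"}, areas).value)
print(toop_group(set(), areas).value)
```

Keeping the threshold as a parameter makes the subjective boundary between the first two groups explicit rather than hiding it in the code.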

TOOP’s criteria are tightly bound to the piloting needs, whereas the rationale behind their choice is OOP-specific rather than generic. The methodology here follows a similar line of reasoning, but differs in the conceptual framework, which is more formally defined and made reusable by other projects as well.

ISA² Interim Evaluation

The interim evaluation aimed to assess how well the ISA² Programme has performed since its start in 2016 and whether its existence continues to be justified. Based on stakeholders’ views, opinions and public consultation, it evaluated the implementation of the programme based on seven criteria and identified several points for improvement.

The evaluation criteria considered were: Relevance (the alignment between the objectives of the programme and the current needs and problems experienced by stakeholders); Effectiveness (the extent to which the programme has achieved its objectives); Efficiency (the extent to which the programme’s objectives are achieved at a minimum cost); Coherence (the alignment between the programme and comparable EU initiatives as well as the overall EU policy framework); EU added value (the additional impacts generated by the programme, as opposed to leaving the subject matter in the hands of Member States); Utility (the extent to which the programme meets stakeholders’ needs); and Sustainability (the likelihood that the programme’s results will last beyond its completion).

However, the interim evaluation does not provide a specific methodology, either in terms of criteria choice or in terms of architectural or future piloting recommendations. Its value lies mainly in the identification of possible gaps that exist within the current EU architecture framework even prior to the implementation of the available building blocks. In that sense, its main recommendations for prospective actions are: raising awareness beyond national administrations; moving from user-centric to user-driven solutions; and working towards increased sustainability.

Our work integrates the interim evaluation criteria already at the stage of cataloguing the BB relevant in the DE4A context. More importantly, it takes into consideration the methodological gaps identified in that evaluation in terms of awareness, user-driven solutions and sustainability prescriptions, and integrates specific technical, administrative and operational aspects in the extraction of recommendations for the pilots.

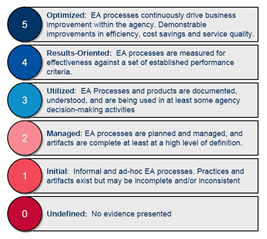

EAAF

The Enterprise Architecture Assessment Framework (EAAF) assists the US government in the assessment and reporting of their enterprise architecture activity and maturity, as well as in the advancement of the use of enterprise architecture to guide political decisions on IT investments. In addition to providing the methods for the assessment, EAAF also identifies the measurement areas and criteria by which government agencies are to rely on the architecture to drive performance improvements. This is integrated into the so-called Performance Improvement Lifecycle, where points for improvement are identified and translated into specific actions.

In that sense, the framework provides a good overall methodology for the assessment of DE4A BB. Following its guidelines, in order to perform the technical assessment, the architects, together with the relevant project partners (mainly from WP1 and WP4):

- Identify and prioritize the BB considering the pilots’ needs and in view of the project goals and objectives;

- Determine specific methodological steps for gap analysis, using common or shared information assets and information technology assets;

- Quantify/qualify and assess the performance to verify compliance with pilots’ requirements and provide report on gap closure; and

- Assess feedback on the pilots’ performance in order to enhance the architecture and fine-tune the assessment methodology for future implementation decisions.

Methodological considerations

The need to develop a generic methodology that integrates some aspects of the standardized methodologies, but does not rely on a single one, is based on several considerations:

- The assessment methodologies currently available either focus on alignment of the BB specifications or are only concerned with the maturity of the solution provided by the building blocks;

- They do not provide a clear definition of the common principles for assessment;

- They do not allow for a phased approach to the assessment and are applicable either to a single BB or to a finalized solution architecture (Note: Although EAAF prescribes the principles for a phased assessment, it does not delineate the phases explicitly and only gives a requirement for the overall outcome of the assessment).

As a result, the architect is prevented from developing an assessment for multiple BB with varying levels of complexity and is also unable to perform a comparative evaluation to determine the best fit for a particular solution architecture.

The methodology developed here is novel in that it addresses the points above and is also generic in the sense that it can be reused by other large-scale projects for similar purposes. It incorporates the assessment principles of existing standards-based methodologies (like CAMSS and EAAF) taking into account the architecture feasibility and sustainability, but it also generalizes these principles over the context of implementation required by DE4A.

Methodology

In order to account for both the piloting recommendations criteria and the performance assessment criteria, the overall methodology requires a phased approach. Therefore, it consists of two phases:

I) The first phase takes stock of the entire list of BB that can have potential use in the project and as part of the piloting. Then, a conceptual and an empirical framework for evaluation is developed – the former enables the gap analysis of the BB, whereas the latter allows for qualitative and comparative analysis of the BB, as well as extraction of concrete recommendations for piloting. The first phase essentially corresponds to the first three points of the EAAF.

II) The second phase will account for the complete list of project artefacts and will provide empirical validation for the results and recommendations from the first phase. In addition, reassessment of the previous gaps will be performed. This phase will mainly be realized in close collaboration with the pilots: direct feedback via surveys and questionnaires on BB performance will be obtained and the initial conceptual framework will be fine-tuned accordingly. The second phase corresponds to the last point of the EAAF.

Conceptual framework

In this section, we first catalogue the BB that are to be considered by the assessment. This step considers the output from D1.5 and establishes a relation to the internal project environment. Then we establish a common conceptualization of the key elements, which is based on the Digital Services Model, Section 2.2 of the Study on “The feasibility and scenarios for the long-term sustainability of the Large Scale Pilots”. With that, a relation to the external project environment is established. Finally, a basic assessment framework is developed to enable the grading of the BB from several maturity aspects: technical, administrative and operational. The output of the assessment will allow us to perform gap analysis and will also guide the extraction of the piloting recommendations.

The Digital Services Model: A five-layered approach

The LSPs so far have developed building blocks that enable cross-border interoperability based on standards, specifications and common code/components. Therefore, moving beyond the pilot projects and towards actual deployment, it is crucial to develop a structure in which the digital services and the elements they are composed of can be conceptualized.

To establish a conceptual model, it is important to clearly set out the key terminology that is used in relation to the DSI for the provision of cross-border public services. CEF provides an overarching framework suitable for this purpose, called Digital Services Model (DSM). It takes into account the deliverables of the LSPs, the stakeholders and roles they can take on, and the drivers behind the dynamics of this complex ecosystem.

The Digital Services Model is needed not only to establish a common terminology and framework, but also to analyse the needs and requirements for the future deployment of any digital services, enabling continuity between the methodologies developed here and those of the LSPs. Thus, it presents the different levels of granularity which need to be taken into consideration for the overall management of the DSI for the provision of public services.

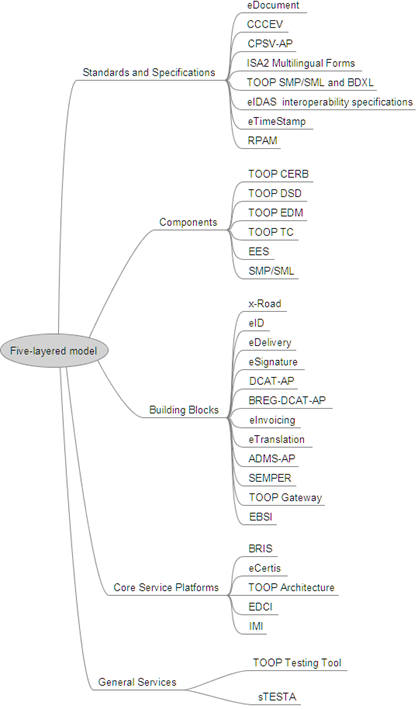

The following elements represent the main part of the DSM taxonomy:

- Standards and Specifications;

- Common code or Components;

- Building Blocks;

- Core Service Platforms;

- Generic Services.

Standards and Specifications have been used by all the LSPs for the development of the digital services. They play a central role in interoperability, because they ensure that systems have commonalities in key areas and can therefore communicate with one another.

Components are the common code that has been developed for the building blocks. Building blocks are made up of several components (e.g. a timestamp component/functionality). These are often referred to as modules in the deliverables of the LSPs. A component can be either BB-specific or used in several BB.

Essentially, all the solutions derived from the LSPs are ultimately building blocks in the sense that they are services that can be integrated as part of other services. Given the fact that these building blocks have the most obvious potential for reuse across different domains (or Core Service Platforms) these can be seen as a specific layer as part of the set of digital services.

Core Service Platforms enable the provision of cross-border digital services in different domains, like eHealth, eJustice and eProcurement. These are the platforms where all the different BB for a specific service (e.g. eHealth services or eID services) are brought together and made available, enabling service providers to take up and reuse the services as part of their own services. The Core Service Platform (CSP) level should eventually enable the Member States to interact with other Member States through the use of building blocks (via the Generic Services).

It is important to determine what building blocks have been developed by an LSP, as well as which of these are CSP-specific and which are reusable. The CSP-specific blocks are called domain blocks (e.g. ePrescription is specific to eHealth) and the reusable blocks are called building blocks (e.g. eID can be reused in various domains).

The reusable building blocks are the strongest common element between the various CSPs. They therefore need to meet the needs and requirements of all the CSPs. This underlines the links between the building blocks and the CSPs, and the need to manage both of these simultaneously.

Generic Services is the level of abstraction at which the Member States integrate or connect to the CSPs. These interconnections are necessary to link up a Member State so it can provide cross-border access and use of national eIDs, electronic health records, national procurement platforms, national eJustice platforms and public services for foreign business. Each Member State has to ensure that these existing systems at national level are linked up with the CSPs through Generic Services in order to be cross-border enabled.

To define a common taxonomy for BB description prior to the actual assessment, the relevant BB are catalogued in view of the five-layered model described above. This is represented in the figure below (Taxonomy of Building Blocks).

Although implicitly understandable, it is worth noting that some BB can belong to two different categories, as their application is largely context dependent. In other words, whereas in one context a certain BB can be seen as a Standard/Specification, in another context it can be a Component. In those cases, the more general category is assigned to that BB, giving priority to showing its potential rather than its most common use.
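The tie-breaking rule for BB that fit two categories can be sketched as follows. The text does not define which layer counts as "more general", so the ordering used here (the five layers in the order they are listed above, with the earlier layer treated as more general) is an assumption made only for this illustration; `Layer` and `catalogue_layer` are hypothetical names.

```python
from enum import IntEnum


class Layer(IntEnum):
    # Five DSM layers in the order listed in the text.
    # Assumption: a lower value means a more general category.
    STANDARD_OR_SPECIFICATION = 1
    COMPONENT = 2
    BUILDING_BLOCK = 3
    CORE_SERVICE_PLATFORM = 4
    GENERIC_SERVICE = 5


def catalogue_layer(candidates):
    """Return the single layer under which a BB is catalogued.

    When a BB fits several layers depending on context, the most
    general candidate (under the assumed ordering) is chosen.
    """
    candidates = set(candidates)
    if not candidates:
        raise ValueError("a BB must fit at least one layer")
    return min(candidates)


# A BB that acts as a specification in one context and as a component
# in another is catalogued under the more general of the two.
chosen = catalogue_layer({Layer.COMPONENT, Layer.STANDARD_OR_SPECIFICATION})
print(chosen.name)
```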

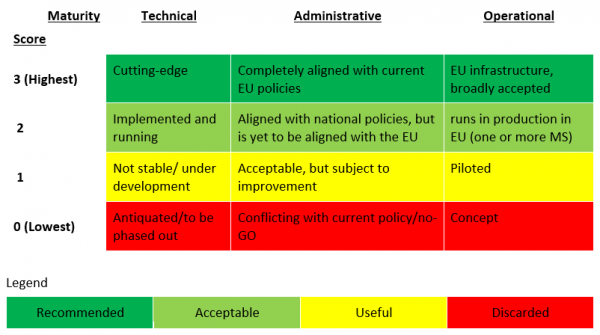

Assessment criteria

In this section, a basic assessment framework is presented, composed of the criteria that guide the expert scoring of BB from the aspect of technical, administrative and operational maturity. The criteria essentially represent a matrix indexed by two dimensions: Score and Maturity aspect. These dimensions, together with the semantics of the criteria (the matrix cells), are shown in the table below (Conceptual BB assessment framework). For better graphical representation of the empirical assessment that will be performed in the next section, each row is represented by a colour, which visually conveys the overall maturity of a certain building block.

The colouring of the framework is not merely a visual aid for the reader; rather, it is meant to serve as a concrete input (an additional dimension) for the prospective formalization of the assessment framework. Such formalization would enable a semi-automatic and, ultimately, a fully automatic assessment of the maturity and quality attributes of both a set of desired (composable) BB and a solution architecture representing a Core Service Platform or a Generic Service per se.
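As a hedged illustration of what such a formalization might look like, the sketch below encodes part of the Score × Maturity aspect matrix as a lookup table. The cell labels and colour codes are the ones used in the BB recommendations table; the numeric ranks, the assignment of labels to aspects (taken from the column order) and the `weakest_aspect` roll-up are assumptions, not part of the framework itself.

```python
from enum import IntEnum


class Score(IntEnum):
    """Four colour-coded levels; the numeric ranks are an assumption."""
    RED = 0
    YELLOW = 1
    LIGHT_GREEN = 2
    DARK_GREEN = 3


# Colour codes as used in the BB recommendations table.
COLOUR = {
    Score.RED: "#FF0000",
    Score.YELLOW: "#FFFF00",
    Score.LIGHT_GREEN: "#92D050",
    Score.DARK_GREEN: "#00B050",
}

# Some matrix cells: (maturity aspect, score) -> criterion semantics.
# Only cells whose wording appears in the recommendations table are listed.
CRITERIA = {
    ("Technical", Score.LIGHT_GREEN): "Implemented and running",
    ("Technical", Score.YELLOW): "Not stable/ under development",
    ("Administrative", Score.DARK_GREEN): "Completely aligned with current EU policies",
    ("Administrative", Score.YELLOW): "Acceptable, but subject to improvement",
    ("Operational", Score.DARK_GREEN): "EU infrastructure, broadly accepted",
    ("Operational", Score.LIGHT_GREEN): "runs in production in EU (one or more MS)",
    ("Operational", Score.YELLOW): "Piloted",
    ("Operational", Score.RED): "Concept",
}


def score_for(aspect: str, label: str) -> Score:
    """Look up the score level of a criterion label within an aspect."""
    for (cell_aspect, score), text in CRITERIA.items():
        if cell_aspect == aspect and text == label:
            return score
    raise KeyError((aspect, label))


def weakest_aspect(labels: dict) -> tuple:
    """Hypothetical roll-up: the lowest-scoring aspect and its colour."""
    aspect = min(labels, key=lambda a: score_for(a, labels[a]))
    return aspect, COLOUR[score_for(aspect, labels[aspect])]


row = {
    "Technical": "Implemented and running",
    "Administrative": "Completely aligned with current EU policies",
    "Operational": "Piloted",
}
print(weakest_aspect(row))
```

Treating the colour as an ordered value rather than as decoration is what would allow scores to be compared and aggregated automatically; the aggregation rule itself would still have to be agreed by the assessment group.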

It is important to note that the framework represented above is a simplified version of the generic assessment framework that will be the final contribution by WP2. This is because at this stage it cannot be expected that all the information needed from the pilots has been obtained for an overall architecture evaluation to take place. Such an assessment will be performed in the second phase of the BB assessment task, when the framework from Table 2 will also be further revised and fine-tuned. As a result, the gap analysis in the second phase will take into account the exhaustive set of pilots’ requirements and the pilots’ feedback on the implemented BB.

Empirical framework

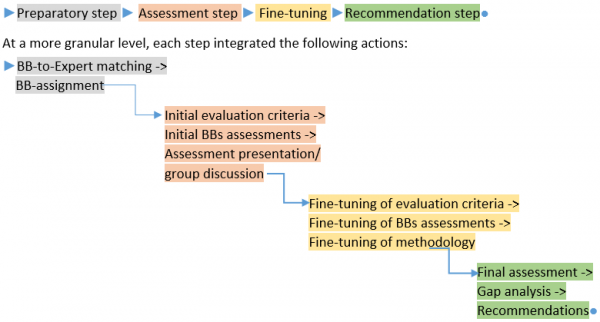

The empirical framework is essentially an implementation of the conceptual framework with concrete recommendations as an output. While the conceptual framework is based on well-standardized assessment methodologies, the (input to the) empirical evaluation of the BB relies largely on expert opinions and judgements. To close the subjectivity gap between the experts’ evaluation criteria, the BB Assessment group defined an internal process of iterative calibration on the evaluation criteria, as represented by the process flow below:

In the Preparatory step, the building blocks were matched to the experts’ experience and expertise with respect to the capabilities provided by each BB and the architecture principles outlined by the DE4A objectives. One or more groups of BB with shared capabilities were then assigned to each expert for assessment.

In the Assessment step, the initial evaluation criteria were agreed upon and integrated into the basic assessment framework. Then, the results from the initial assessments for each BB were presented in front of the BB Assessment group. This allowed for a constructive discussion on the need to fine-tune the evaluation criteria and to revise the evaluation results. These considerations are part of the Fine-tuning step, which is essentially an iterative procedure on its own, until the complete set of evaluation criteria is obtained, and the BB scores are approved by all experts of the BB Assessment group.

In the Recommendation step, the final scores for each BB were provided for all three maturity aspects: Technical, Administrative and Operational. These are then analysed in view of the piloting requirements and the DE4A objectives as part of the Gap analysis, enabling the extraction of a single Recommendation as an output from the overall process.

The output of the Preparatory step is the Taxonomy of BB, whereas the output of the Assessment step is the conceptual framework, whose criteria, aspects and semantics were further fine-tuned in the third step. The scores and recommendations are obtained as an output from the final (Recommendation) step, supported by the argumentation given in the Gap Analysis. For a more complete overview, the final scores and recommendations are presented together in the BB recommendations table below.

Recommendations and Gap Analysis

This section summarizes the assessment for each building block, by aspect and with an overall recommendation grade. A recommendation is essentially the expert opinion based on the results from the conceptual framework, the gap analysis and in relation to the piloting needs and requirements. The overall list of assessed BB is catalogued as follows:

| # | Building Block | Technical Maturity | Administrative Maturity | Operational Maturity | Recommendation |
| --- | --- | --- | --- | --- | --- |
| 1 | eDelivery | Implemented and running | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Recommended |
| 2 | eID | Implemented and running | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Recommended |
| 3 | eSignature | Cutting-edge | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Recommended |
| 4 | CCCEV | Implemented and running | Completely aligned with current EU policies | Piloted | Acceptable |
| 5 | CPSV-AP | Implemented and running | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Acceptable |
| 6 | eCertis | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Recommended |
| 7 | eIDAS interoperability specifications | Implemented and running | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Acceptable |
| 8 | sTESTA | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Discarded |
| 9 | x-Road | Implemented and running | Aligned with national policies, but is yet to be aligned with the EU | runs in production in EU (one or more MS) | Useful |
| 10 | DCAT-AP | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Acceptable |
| 11 | BREG-DCAT-AP | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Acceptable |
| 12 | ISA2 Multilingual Forms | Implemented and running | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Acceptable |
| 13 | EBSI (CEF Blockchain) | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Useful |
| 14 | eInvoicing | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Recommended |
| 15 | eTranslation | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Recommended |
| 16 | SEMPER | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Recommended |
| 17 | BRIS | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Discarded |
| 18 | TOOP Architecture | Implemented and running | Aligned with national policies, but is yet to be aligned with the EU | Piloted | Recommended |
| 19 | TOOP CERB | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Useful |
| 20 | TOOP DSD | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Acceptable |
| 21 | TOOP eDelivery [SMP/SML and BDXL] | Cutting-edge | Aligned with national policies, but is yet to be aligned with the EU | runs in production in EU (one or more MS) | Recommended |
| 22 | TOOP EDM | Not stable/ under development | Acceptable, but subject to improvement | Concept | Acceptable |
| 23 | TOOP TC | Implemented and running | Aligned with national policies, but is yet to be aligned with the EU | Piloted | Recommended |
| 24 | TOOP Gateway | Cutting-edge | Aligned with national policies, but is yet to be aligned with the EU | runs in production in EU (one or more MS) | Recommended |
| 25 | TOOP Testing Tool | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Acceptable |
| 26 | ADMS-AP | Implemented and running | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Useful |
| 27 | EDCI | Not stable/ under development | Acceptable, but subject to improvement | Piloted | Useful |
| 28 | EES | Not stable/ under development | Aligned with national policies, yet to be aligned with the EU | Concept | Useful |
| 29 | eTimeStamp | Not stable/ under development | Completely aligned with current EU policies | Concept | Recommended |
| 30 | eDocument | Not stable/ under development | Acceptable, but subject to improvement | Concept | Discarded |
| 31 | RPAM | Implemented and running | Acceptable, but subject to improvement | Piloted | Useful |
| 32 | SMP/SML | Cutting-edge | Completely aligned with current EU policies | runs in production in EU (one or more MS) | Recommended |
| 33 | IMI | Cutting-edge | Completely aligned with current EU policies | EU infrastructure, broadly accepted | Discarded |

The following is an analysis of the results of the overall assessment from the perspective of the pilots’ needs, the conceptual framework and the overall architectural principles. It is important to note that this is not an exhaustive gap analysis; rather, it serves as a proof of concept for the methodological and assessment decisions made.

Pilots’ needs considerations

From the table above, it can be noted that, although some of the BB are assessed as immature in some aspect, the final recommendation is still for them to be used by the pilots. This is because, regardless of its current state of maturity, a BB may still have the architecture capabilities that match the pilots’ requirements and merely require adjustment in the DE4A context. This is the case, for instance, with SEMPER. The SEMPER extension to eIDAS is not fully mature yet, but it is the only cross-border functionality for working with proxies (mandates) that has been successfully piloted to date. Otherwise, a DE4A-specific solution for cross-border powers validation would have to be developed from scratch, which is neither useful nor feasible within the project timeframe. In order for SEMPER to gain a broader user base, it has to be validated by more Member States (currently, it has been piloted with 4 MS). In addition, SEMPER has been piloted with legal persons only. Although this is sufficient for the DBA pilot, it is not sufficient for the moving abroad and studying abroad pilots, which need piloting with natural persons. The DE4A pilots themselves may be used for this. Finally, SEMPER extends eIDAS but has not been handed over to DIGIT yet, and has therefore not been incorporated in the eIDAS reference software of DIGIT. Integration in eIDAS will improve the sustainability of the SEMPER extension. Concretely, SEMPER would benefit from eIDAS-like specifications that allow Member States that do not use the eIDAS reference software to develop their own extension based on these specifications.

In contrast to SEMPER, there are also cases where a BB is assessed as completely mature in most aspects but is still disregarded at the Recommendation stage. This is, for instance, the case with the IMI BB, which, despite its high maturity in all aspects, is out of the scope of the DE4A project. Similarly, the BRIS building block has a scope that is much narrower (especially from an administrative perspective) than the scope of DE4A and is therefore not to be used by the pilots. More concretely, BRIS has been developed for inter-business register communication, which is not the primary focus of the DBA pilot. The functional shortcomings of BRIS for piloting in DBA (D4.5, annex V) have been confirmed by DG DIGIT, and no BRIS roadmap is foreseen that will deliver a solution to the findings. Furthermore, it is legally not feasible to use the BRIS network for the DE4A pilots (its use is currently not allowed for non-business registers). An alternative is being discussed with DIGIT: a message brokering platform, piloted in TOOP, as a possible future BRIS-wide solution. However, funding for the continuation of the platform has not been arranged, and the current intention is to bring the (cloud-based) prototype infrastructure down when TOOP testing ends.

Similar reasoning in compiling the overall recommendation score is applied to the other building blocks. These considerations have been discussed in detail at the BB Assessment Group meetings during the Recommendation step of the empirical framework. It is out of scope of the deliverable to present a detailed overview of each BB. However, the subtlety of some of the assessment criteria (such as context dependence) is also an argument to justify the decision to rely only on the experts’ evaluations in the first phase of the methodology.

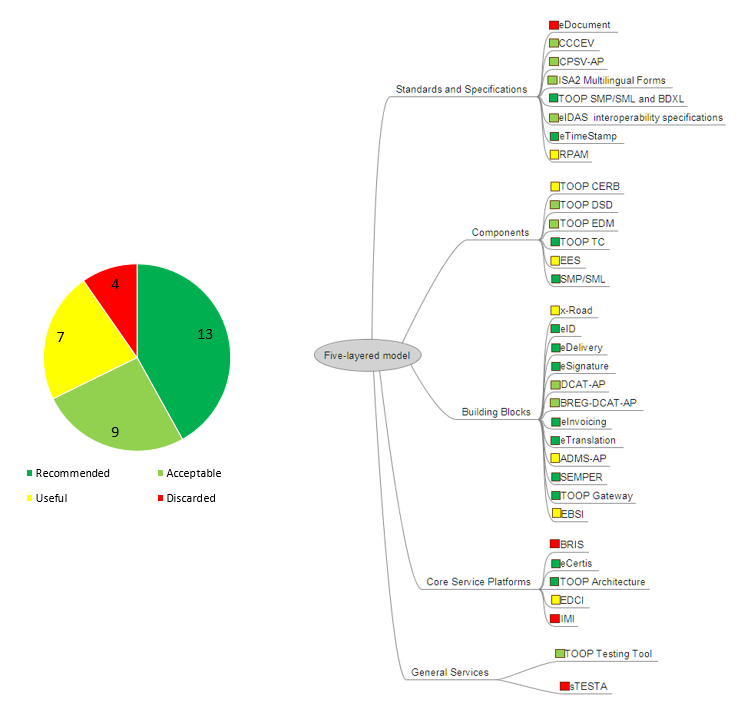

Assessment outcomes considerations

Out of the 33 assessed BB, 13 are Recommended for implementation, 9 are Acceptable, 7 are Useful and 4 are Discarded. In terms of technical maturity, 5 are cutting-edge, 18 are implemented and running in an operational environment, whereas 10 are not stable or under development. From an administrative maturity aspect, about half (17) of the BB are completely aligned with current EU policies; 7 are aligned with national policies but are yet to be consolidated at an EU level, whereas 9 (although acceptable) are still subject to further improvements.
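The recommendation totals can be reproduced directly from the Recommendation column of the BB recommendations table. The snippet below is a small sketch of that tally; the variable names are illustrative only, and the data are copied from the table rather than taken from any project tooling.

```python
from collections import Counter

# Recommendation column of the BB recommendations table, grouped by grade.
BY_GRADE = {
    "Recommended": [
        "eDelivery", "eID", "eSignature", "eCertis", "eInvoicing",
        "eTranslation", "SEMPER", "TOOP Architecture",
        "TOOP eDelivery [SMP/SML and BDXL]", "TOOP TC", "TOOP Gateway",
        "eTimeStamp", "SMP/SML",
    ],
    "Acceptable": [
        "CCCEV", "CPSV-AP", "eIDAS interoperability specifications",
        "DCAT-AP", "BREG-DCAT-AP", "ISA2 Multilingual Forms", "TOOP DSD",
        "TOOP EDM", "TOOP Testing Tool",
    ],
    "Useful": [
        "x-Road", "EBSI (CEF Blockchain)", "TOOP CERB", "ADMS-AP", "EDCI",
        "EES", "RPAM",
    ],
    "Discarded": ["sTESTA", "BRIS", "eDocument", "IMI"],
}

# Flatten to one entry per building block, then count per grade.
recommendation = {bb: grade for grade, bbs in BY_GRADE.items() for bb in bbs}
counts = Counter(recommendation.values())

if __name__ == "__main__":
    for grade, n in counts.most_common():
        print(f"{grade}: {n}")
    print("Total:", len(recommendation))
```

The same pattern can be applied to each maturity column to cross-check the per-aspect figures against the table.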

From an operational maturity aspect, there are 8 BB which are broadly accepted as part of an EU infrastructure, 12 that run in production in one or more Member States and 10 that are piloted within some kind of operational or testing environment. It is interesting to note that the only aspect by which a BB has been assessed as immature is the Operational aspect. Thus, there are 4 BB (TOOP EDM, EES, eTimeStamp and eDocument) that are marked as operationally immature, as they are only at the stage of a Concept and are yet to be developed.

From a BB type aspect (also visible in the figure above), most of the recommended BB are of the type ‘Building Block’ (7 out of 13) and ‘Standards and specifications’, whereas the ‘General services’ and the ‘Core service platforms’ are the least numerous and the most immature. This is expected, as the latter are also the most complex ones and are few in number across the EU.

It is also notable that most of the BB considered for use by the pilots are in a mature and useful state that allows reusability and potential upgrades before implementation in the DE4A pilots. Such considerations are already being made (as discussed in the previous subsection) and are part of the piloting requirements to be assessed in the second phase of the methodology.

Architecture framework considerations

As outlined in the methodological considerations above, the two phases of the overall methodology follow the Enterprise Architecture Assessment Framework (EAAF) principles, with the additional step of providing recommendations for the pilots in between the two phases. Such an approach makes it possible to obtain, in addition to the qualitative evaluation, a quantitative score for the maturity of the overall architecture as a (sub)set of the assessed BB. In that sense, while the output of the EAAF is a maturity level assessment of the overall architecture, the phased approach in DE4A creates an intermediate feedback loop between the pilots and the Project Start Architecture, allowing for adaptable integration of the assessment methodology within the WP2 change management.

Although the overall assessment is incomplete, it is still possible to obtain a quantitative assessment for a solution architecture after the first assessment phase. However, this is not to be considered the maturity level of the overall architecture framework, but only a ‘current maturity level’ of a solution architecture composed of a given set of BB. Such a value can serve as a reference point to be compared against a given KPI for the overall maturity, should there be such a requirement.

In order to compile a single value for the current maturity level of a pilot solution architecture, the set of BB assessments and their recommendations shall be compared against the baseline for the EAAF maturity levels.
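Purely as an illustration of what compiling such a single value could look like, the sketch below maps the criteria of each maturity aspect to an ordinal score and aggregates them over a set of BB. Everything numeric here is an assumption: the ordinal scales, the choice of the weakest aspect as the per-BB score, and the weakest/average aggregation are hypothetical and are not the EAAF baseline, which the methodology has yet to apply. The two example BB and their criteria are taken from the BB recommendations table.

```python
from statistics import mean

# ASSUMPTION: hypothetical ordinal scales per maturity aspect (higher = more mature).
SCALE = {
    "technical": {
        "Not stable/ under development": 1,
        "Implemented and running": 2,
        "Cutting-edge": 3,
    },
    "administrative": {
        "Acceptable, but subject to improvement": 1,
        "Aligned with national policies, but is yet to be aligned with the EU": 2,
        "Completely aligned with current EU policies": 3,
    },
    "operational": {
        "Concept": 0,
        "Piloted": 1,
        "runs in production in EU (one or more MS)": 2,
        "EU infrastructure, broadly accepted": 3,
    },
}


def bb_score(technical: str, administrative: str, operational: str) -> int:
    """Score a BB by its weakest aspect (a conservative, assumed rule)."""
    return min(
        SCALE["technical"][technical],
        SCALE["administrative"][administrative],
        SCALE["operational"][operational],
    )


def current_maturity_level(assessments: dict) -> dict:
    """Aggregate per-BB scores for a solution architecture (set of BB)."""
    scores = [bb_score(*aspects) for aspects in assessments.values()]
    return {"weakest": min(scores), "average": mean(scores)}


if __name__ == "__main__":
    # Example solution architecture made of two BB from the table.
    architecture = {
        "eDelivery": (
            "Implemented and running",
            "Completely aligned with current EU policies",
            "runs in production in EU (one or more MS)",
        ),
        "SEMPER": (
            "Not stable/ under development",
            "Acceptable, but subject to improvement",
            "Piloted",
        ),
    }
    print(current_maturity_level(architecture))
```

Reporting both the weakest and the average score keeps a single immature BB visible instead of letting it be averaged away; a real implementation would replace these assumed scales with the EAAF maturity levels.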

In the context of DE4A, we will provide such assessment in the second phase of implementation of this methodology, as currently there is no definite list of BB matched to the specific pilots.