DE4A Playground

Introduction

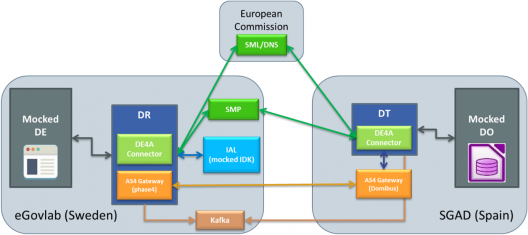

As the necessary infrastructure setup is quite complex, WP5 designed the “DE4A Playground”, which helps pilot partners onboard to the DE4A network. The Playground is a set of predefined and pre-deployed components to be used as is. It is essentially a temporary infrastructure with two purposes:

- It simulates a real scenario where the User can request data (evidence) about a subject (citizen or company). Thus, it allows testing the performance of the DE4A Connector by using mocked components and fake data.

- It allows DE4A pilot participants to deploy their own infrastructure in an orderly manner. The internal document “Playground joining stages and information required from pilot partners” sets out a three-stage process for deploying their software components: first their own developed entities (DE/DO), then the DE4A Connector provided by WP5, and finally the SMP of the eDelivery infrastructure. At the end of each stage, the pilot partner can test their deployment by making use of the rest of the Playground components.

The target audience for this chapter is primarily software developers who want to connect their DE/DR/DT/DO system to DE4A.

The Playground consists of the following components:

- Data Evaluator

- DE4A Connector with the role of Data Requestor, using the phase4 AS4 Gateway

- DE4A Connector with the role of Data Transferor

- Data Owner

- Domibus AS4 Gateway

- SMP

- Mocked IDK

- Kafka Server

The DO and the DT are deployed at SGAD (ES). All other components, including the Kafka Server, are deployed at eGovlab (SE).

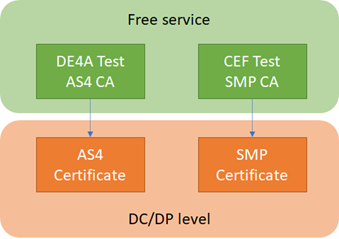

Electronic Certificates

Most of the components used require the HTTPS protocol, so TLS certificates are needed. A separate document, “Policy for Transport Security”, describes the requirements for them. Additionally, the SMP and the AS4 gateways each require a separate certificate for signing and encrypting the payload. For this purpose, we required two separate Certificate Authorities (CAs): one for the AS4 Gateway and one for the SMP component.

The above figure depicts the layout of the CAs. For the “DE4A Test AS4 CA”, eGovlab created a new root CA that issues AS4 certificates. This simple CA can issue as many certificates as needed, but it is only suitable for piloting purposes. For a production exchange, DE4A would need to find a commercial solution. For the SMP CA, we needed a CA that meets the requirements of CEF, which maintains the Service Metadata Locator (SML). To meet our urgent need for a suitable CA, CEF kindly offered us 10 SMP certificates based on their trusted “Connectivity Test CA”. In parallel, we promised CEF to look for a commercial CA that could serve as a sustainable solution for SMP certificates. The requirements are outlined in CEF’s “Trust Models Guidance” document.

Data Evaluator and Data Owner

The DE and DO are both set up in the DE4A Playground. These components are deployed to act as a starting point where the pilots can interact with the Playground.

Data Evaluator

The Data Evaluator deployed in the Playground was developed as a separate piece of software by WP5. The source code can be found at https://github.com/de4a-wp5/wp5-demo-ui and the deployed instance of this software is available at https://de4a-dev-mock.egovlab.eu/public.

All the IEM handling functionality is taken from the shared “de4a-commons” library available at https://github.com/de4a-wp5/de4a-commons.

Note: the functionality of this tool may be extended during the further development of the project. The primary goal is to support Member States in onboarding as well as possible.

Note: the terminology used in the demo application is very simplified and may not meet the needs of real-world applications. Real pilot applications should align the texts with their local needs; recommendations may be provided by WP7.

System requirements

The DE4A WP5 Demo UI is a web application that exclusively runs in the browser. The user interface is based on the Bootstrap 4.6 framework and the system requirements for using it are documented at https://getbootstrap.com/docs/4.6/getting-started/browsers-devices/

User interface

The basic user interface of the DE4A WP5 Demo UI is divided into four parts:

- The “header” that contains the application title as well as the breadcrumbs

- The “menu” that shows the available functionality – each of the menu items brings up different functionality and is described below.

- The “content area” that holds the main interaction with the user and shows content depending on the chosen menu item (see the “menu” above).

- The “footer” that contains static information and a brief disclaimer

Functionality overview

The Data Evaluator is separated into two main areas:

- the “Data Evaluator” testing part, that can be used to send real test messages

- the “Demo UI” part, that contains mainly support functionality for software developers

An overview of the available functionality is provided below, listed per menu item:

- “Data Evaluator” – contains a tree with all child pages

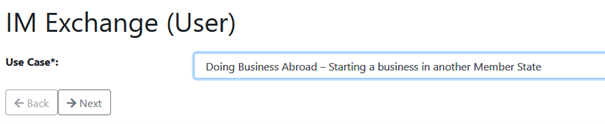

- “IM Exchange (User)” – initiate a new evidence request using the Intermediation (IM) pattern. The necessary field values are collected in a wizard-style approach. This is one of the key functionalities and is described in further detail below. This functionality is only compatible with the Doing Business Abroad (DBA) pilot.

- “IM Exchange (Expert)” – initiate a new evidence request using the IM pattern. This page requires the user to provide the final XML payload to be sent and offers no graphical guidance. By default, a random message is provided as the basis.

- “USI Exchange (User)” – initiate a new evidence request using the User Supported Intermediation (USI) pattern. The necessary field values are collected in a wizard-style approach. This is one of the key functionalities and is described in further detail below. This functionality is compatible with the Studying Abroad (SA) and Moving Abroad (MA) pilots.

- “USI Exchange (Mock)” – this is a relic of an early development stage, in which we tested the redirect functionality. This page does NOT use the Connector for message exchange but uses a proprietary interface of the Mock DO. This legacy page will be removed in the next iteration.

- “Demo UI” – contains a tree with all child pages

- “Send Random Message” – this page lets you create a random message for all known piloting scenarios and send it to an arbitrary endpoint URL. This can be used to test inbound messages on the DE and DO side. One key drawback here is that the message itself is not visualized. This functionality will be removed in the next iteration.

- “Send Message” – this page lets you send a user-provided XML message to a customizable endpoint URL. Each provided XML message is validated against the XML schemas of the selected pilot scenario.

- “Create Random Message” – on this page, random messages according to specific pilot scenarios can be created. The resulting XML message is displayed to the user for further use. The output of this page can e.g. be used on the “Send Message” page to send it to an HTTP endpoint.

- “Validate Message” – on this page arbitrary XML messages can be validated against selectable pilot scenarios. This page only verifies if a message is valid or invalid according to the defined XML schemas.

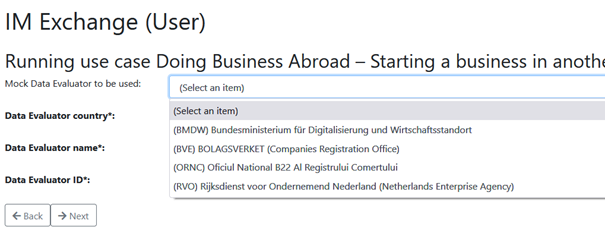
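The “Send Message” page described above essentially posts an XML document to an HTTP endpoint. The following is a minimal sketch of how a developer might reproduce that step from a script, not the Demo UI’s actual implementation. The endpoint URL, the payload and the `Content-Type` value are assumptions made for illustration; the real inbound URL and message format depend on the component under test.

```python
import urllib.request


def build_xml_post(endpoint_url: str, xml_payload: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST request carrying an XML message."""
    body = xml_payload.encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        method="POST",
        # Assumption: the receiving endpoint accepts plain XML bodies.
        headers={"Content-Type": "application/xml; charset=utf-8"},
    )


# Hypothetical endpoint and placeholder payload, for illustration only.
request = build_xml_post("https://example.org/de4a/inbound", "<Example/>")

# Actually sending the message would look like this:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(response.status)
```

Building the request separately from sending it makes it easy to inspect the method, headers and body before anything leaves the machine.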

The content of the pages “IM Exchange (User)” and “USI Exchange (User)” is now described in more detail. As they are very similar, only the differences are outlined. The general flow for the user is as follows:

- Enter all necessary data in a web form

- Preview the collected data before sending to give explicit consent

- Send the request to the DO

- Preview the received data (on DE side for the IM exchange, on DO side for the USI exchange)

The process starts with the selection of the Use Case to be performed:

The next step is the selection of the Data Evaluator the user wants to test. For simplicity a set of Mock DEs is provided, from which the user can select one. Alternatively, each field can also be filled manually by the user. In a real-world application these data elements will most likely be provided as constant values by the DE application.

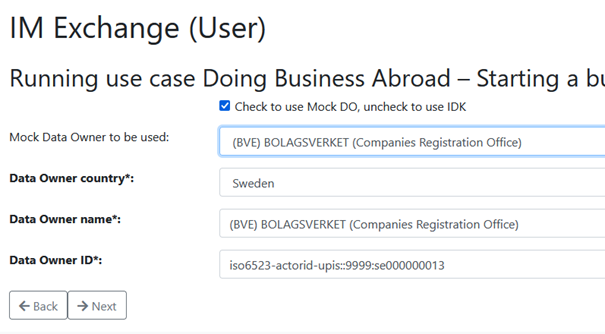

After the DE details have been provided, the DO details must also be provided. Here the user can again select from a set of Mock DOs or provide the details manually. Additionally, the Mocked IDK can be queried, based on the country code of the DO. Note: for the USI exchange, the “Data Owner redirect URL” of the DO must also be provided. This field is not available for the IM exchange.

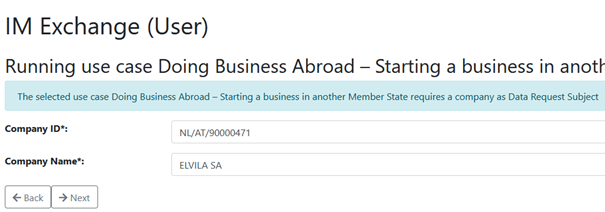

The following step deals with the details of the “Data Request Subject”. Depending on the chosen use case, this can be either a Company (legal person), consisting of a Company ID and a Company name, or a Natural person, consisting of a Natural Person ID, First name, Family name and Birthday. In both cases the respective ID elements should be formatted in the eIDAS style (<Data Owner Country Code>/<Data Evaluator Country Code>/<ID>).

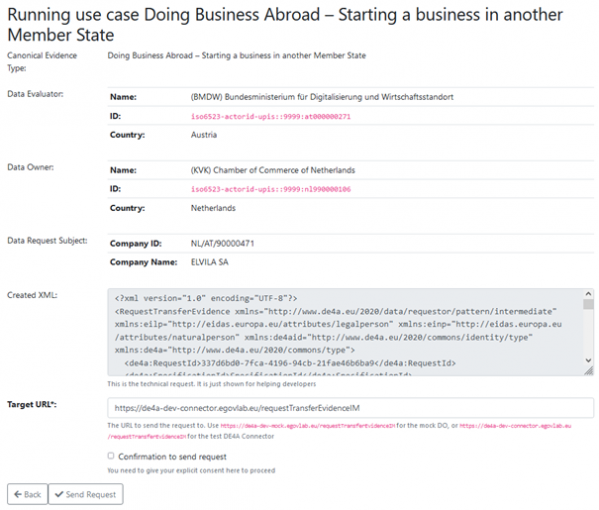
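The identifier format described above can be built and checked with a few lines of code. The sketch below is only an illustration of the three-part layout; the class and function names are hypothetical, the sample value is made up, and the check for two-letter uppercase country codes is an assumption that is not stated in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EidasStyleId:
    do_country: str  # country code of the Data Owner
    de_country: str  # country code of the Data Evaluator
    local_id: str    # the actual company or person identifier

    def __str__(self) -> str:
        return f"{self.do_country}/{self.de_country}/{self.local_id}"


def parse_eidas_style_id(value: str) -> EidasStyleId:
    """Split '<DO country>/<DE country>/<ID>' into its three parts."""
    # Split on the first two slashes only, so the ID part may contain "/".
    parts = value.split("/", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected <DO country>/<DE country>/<ID>: {value!r}")
    do_country, de_country, local_id = parts
    for code in (do_country, de_country):
        # Assumption: country codes are two uppercase ASCII letters.
        if not (len(code) == 2 and code.isascii() and code.isalpha() and code.isupper()):
            raise ValueError(f"invalid country code: {code!r}")
    return EidasStyleId(do_country, de_country, local_id)


# Made-up example: a subject known to a Spanish DO, requested by a Swedish DE.
subject_id = parse_eidas_style_id("ES/SE/12345")
```

Parsing the value before sending a request gives early feedback on malformed identifiers instead of a rejected message later in the exchange.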

Now all the necessary data has been collected and is shown to the user for approval. This view shows both the provided data in a human-readable format and the created XML document, to help developers create the correct field mapping. The destination is requested separately in the “Target URL” field: the provided URL is the endpoint URL of the DR where it accepts requests from DEs. The default value is the URL of the Test DR of eGovlab.

Before the user can send the request, they must tick the checkbox “Confirmation to send request”; otherwise the request is not sent. This mimics the “Explicit Request” functionality as described by the SDGR. The exact implementation of this consent functionality may vary from DE to DE.

After providing the necessary consent, the request is sent via the DE4A Connector to the DO. Now the biggest difference between the IM and the USI pattern becomes obvious:

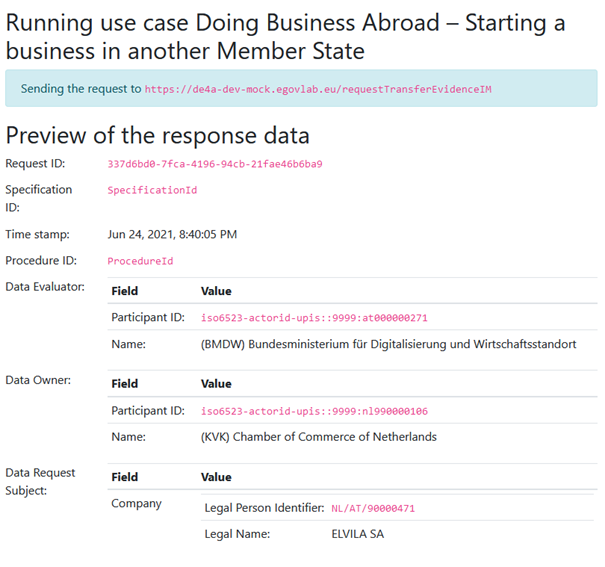

- When using the IM pattern, the data is transferred from the DO to the DE and then visualized at the DE, meaning that the data is transferred anyway. Hence, for this exchange pattern the Demo UI will show the preview.

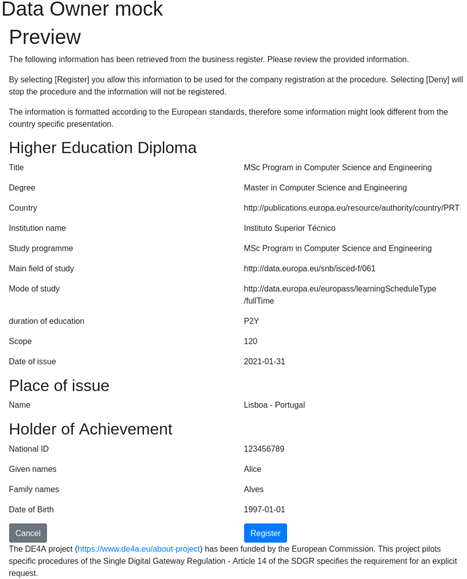

- When using the USI pattern, the user is redirected to a website acting on behalf of the DO and the preview is shown there, meaning that if the user does not approve the data usage, the data will not be transferred from DO to DE. This implies that the user will most likely need to identify themselves a second time in the systems of the DO. Hence, for this exchange pattern the Playground DO will show the preview.

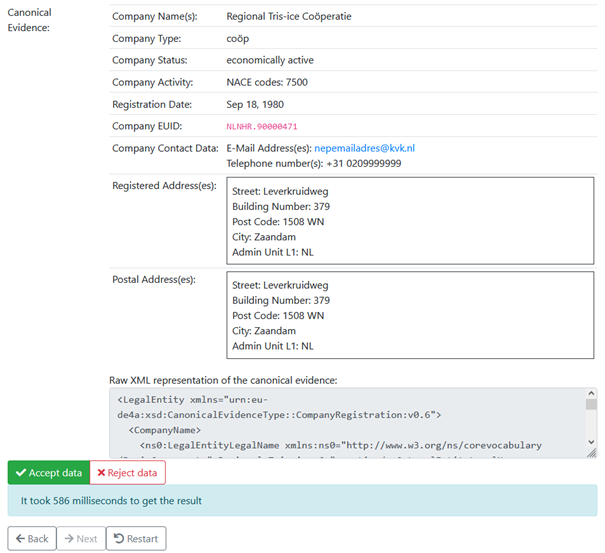
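The difference between the two patterns can be summarised as two small rules: who shows the preview, and whether the evidence reaches the DE when the user declines. The sketch below only restates the description above in code; the names are hypothetical and it is not part of the Demo UI or the Connector.

```python
from enum import Enum


class Pattern(Enum):
    IM = "Intermediation"
    USI = "User Supported Intermediation"


def preview_shown_by(pattern: Pattern) -> str:
    """Return which component displays the evidence preview to the user."""
    return "DE" if pattern is Pattern.IM else "DO"


def evidence_reaches_de(pattern: Pattern, user_approved: bool) -> bool:
    """Return whether the evidence has been transferred from the DO to the DE."""
    if pattern is Pattern.IM:
        # With IM the evidence is already at the DE when the preview is shown.
        return True
    # With USI the DO only releases the evidence after the user approves.
    return user_approved
```

For example, `evidence_reaches_de(Pattern.USI, user_approved=False)` is `False`, while the same call with `Pattern.IM` is `True`, which is why the preview location matters for data minimisation.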

The following figure depicts the preview of an IM pattern request in the Demo UI. It contains the header data of the DE, the DO and the Data Request Subject, as well as the “Canonical Evidence” retrieved from the DO. The Canonical Evidence is again shown both in a human-readable format and in XML format to support software developers.

The “Accept data” and “Reject data” buttons shown in the above figure have no effect in the Demo UI. In a real-world scenario, they would indicate whether the user approves the use of the data.

Data Owner

The Playground DO is deployed with example evidences that it can receive queries for. These are example identifiers from multiple different DOs. For the USI pattern the mocked DO provides a preview functionality, where the user has to consent to sending the evidence before it is sent back to the DE. Together with the DE it provides the user interfaces making the full user journey possible.

Technology used

The DO, like the DE, uses the de4a-commons library for all XML handling.

The DO is deployed as a stand-alone Java 11 application using the Spring Boot framework. The frontend web application needed for the preview functionality is a React.js application bundled by webpack and served by the Spring Boot backend.

Data used

The DO uses a dataset of example evidence provided by the pilots (see the “Pilot testing dataset matrix”).

Deployment and configuration

Data Evaluator

The mocked DE is deployed together with the Playground DR Connector at eGovlab at https://de4a-dev-mock.egovlab.eu. It is deployed behind an Nginx reverse proxy. The mock DE is configured to send messages to the Kafka server, which makes it easy to follow the processes when testing.

Point to TLS configuration

The Nginx reverse proxy is set up to terminate the TLS connection. The TLS certificate is issued by Let’s Encrypt and is automatically renewed by the Certbot service.

Data Owner

The mocked DO functionality is performed by an instance of the de4a-connector-mock project [7]. The application allows configuring parameters for each role that the mock can assume. The DO-related parameters are listed below:

- Endpoints configuration

mock.do.endpoint.im=/requestExtractEvidenceIM

mock.do.endpoint.usi=/requestExtractEvidenceUSI

- USI pattern configuration

# The URL where the mock is deployed.

# Currently only used to generate the redirect URL for the DO preview

mock.baseurl=https://pre-smp-dr-de4a.redsara.es/de4a-mock-connector/

#the base of the path to the preview pages

mock.do.preview.endpoint.base=/preview/

#path to the preview index page, append to the base path to get the full path

mock.do.preview.endpoint.index=index

#paths to the preview websocket endpoints, append to the base path to get the full path

mock.do.preview.endpoint.websocket.socket=ws/socket

mock.do.preview.endpoint.websocket.mess=ws/messages

#paths to the preview rest server endpoints, append to the base path to get the full path

mock.do.preview.evidence.requestId.all.endpoint=request/all

mock.do.preview.evidence.redirecturl.endpoint=redirecturl/{requestId}

mock.do.preview.evidence.get.endpoint=request/{requestId}

mock.do.preview.evidence.accept.endpoint=request/{requestId}/accept

mock.do.preview.evidence.reject.endpoint=request/{requestId}/reject

mock.do.preview.evidence.error.endpoint=request/{requestId}/error

# URL of the DT endpoint to which the DO sends its request

mock.do.preview.dt.url=http://localhost:31036/de4a-connector/requestTransferEvidenceUSIDT

The configuration above determines the paths and URLs that the USI pattern uses for previews and redirections.

The mocked DO runs on an Apache Tomcat 9 server located at https://pre-smp-dr-de4a.redsara.es/de4a-mock-connector/. The outbound connections of the DO are HTTP POSTs of ResponseUserRedirection data structures to the URL supplied by the DE in the RequestUserRedirection HTTP POST and, if logging is configured, log messages sent to the Kafka server, either directly via TCP or through the HTTP proxy.
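The comments in the configuration say that each preview endpoint is appended to the preview base path. The sketch below shows one way to derive full URLs from the configured values. It is an illustration only: the helper name and the request ID are hypothetical, and it assumes that the leading slash of the preview base path is relative to the deployed application and not to the host root. How the mock resolves these values internally is not described here.

```python
from typing import Optional
from urllib.parse import quote, urljoin

# Values copied from the configuration above.
BASE_URL = "https://pre-smp-dr-de4a.redsara.es/de4a-mock-connector/"
PREVIEW_BASE = "/preview/"
ENDPOINTS = {
    "index": "index",
    "redirecturl": "redirecturl/{requestId}",
    "get": "request/{requestId}",
    "accept": "request/{requestId}/accept",
}


def preview_url(name: str, request_id: Optional[str] = None) -> str:
    """Compose the full URL of a preview endpoint (hypothetical helper)."""
    # Assumption: the preview base path is relative to the application context.
    path = PREVIEW_BASE.lstrip("/") + ENDPOINTS[name]
    if "{requestId}" in path:
        if request_id is None:
            raise ValueError(f"endpoint {name!r} needs a request ID")
        # Percent-encode the ID so it cannot alter the path structure.
        path = path.replace("{requestId}", quote(request_id, safe=""))
    return urljoin(BASE_URL, path)


# "abc-123" is a made-up request ID.
index_url = preview_url("index")
accept_url = preview_url("accept", "abc-123")
```

With the values above, `index_url` ends in `/de4a-mock-connector/preview/index` and `accept_url` ends in `/de4a-mock-connector/preview/request/abc-123/accept`.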