Difference between revisions of "Second phase"


Revision as of 16:21, 27 January 2023

Building Block Assessment - 2nd Phase

The second phase of the assessment of the architecture building blocks (BBs) used in the DE4A project comes after the cataloging of an initial set of relevant BBs for the Project Start Architecture (PSA). While in the previous phase the BBs were assessed for their technical, administrative and operational maturity, in this phase a more constrained set of BBs actually implemented by the pilots was evaluated from a wider perspective.

Methodology

The evaluation methodology was designed following general systemic principles, with a set of indicators that includes usability, openness, maturity and interoperability, among others. The evaluation criteria were identified, and the results analyzed, from several perspectives: a literature survey and thorough desktop research; a revision and fine-tuning of the initial BB set and evaluation methodology; and an online questionnaire designed to gather feedback from the relevant project partners:

- Member States (MSs)

- WP4 partners - Pilots (Doing Business Abroad - DBA, Moving Abroad - MA, and Studying Abroad - SA)

- WP5 partners - for specific components

To revise and fine-tune the evaluation methodology developed in the first phase, as well as the resulting set of BBs selected for assessment, several meetings and consultations were held with the aforementioned project partners. This led to a narrower set of relevant BBs, consisting of a subset of the ones assessed in the first phase and new ones resulting from the piloting needs and implementation. To capture these specificities, the survey includes a wide set of questions covering all aspects important for the decisions made and for future reuse. Several iterations over the initial set of questions were performed to determine the relevance of each question according to the roles of the project partners providing feedback. Finally, a feedback coordination process was also established for the pilot leaders, who gathered additional information from the pilot partners on their implementation of some of the BBs (or important aspects of them).

It is important to note that partners were divided according to their role in the project because each role had experience with a different set of BBs. As a result, not every partner evaluated the whole set of BBs. Each partner evaluated only the BBs relevant to their practice and research in the DE4A project.

The final set of relevant BBs per partner type is given in Table 1, together with statistics on the number of assessments obtained for each BB.

Table 1. Relevant BBs per partner type and number of assessments per BB

| # | Building Block | # of assessments on the BB | Relevant partner(s) |
|---|---|---|---|
| | **Common Components** | | |
| 1 | eDelivery (data exchange) | 3 | WP5/MSs |
| 2 | SMP/SML | 2 | MSs via pilots |
| 3 | DE4A Connector | 2 | MSs via pilots |
| 4 | DE4A Playground | 6 | DBA/SA/MA |
| | **Semantic** | | |
| 5 | Information Exchange Model | 7 | DBA/SA/MA/WP5 |
| 6 | Canonical Data Models | 6 | DBA/SA/MA DE and DO pilot partners |
| 7 | ESL (implemented as part of SMP/SML) | 1 | WP5 |
| 8 | IAL | 1 | WP5 |
| 9 | MOR | 1 | MA pilot partners |
| | **eID/PoR** | | |
| 10 | SEMPER | 1 | DBA |
| | **VC Pattern** | | |
| 11 | SSI Authority agent | 4 | SA |
| 12 | SSI User agent (mobile) | 4 | SA |
| 13 | EBSI-ESSIF (CEF Blockchain) | 1 | WP5 (T5.4) |
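Table 1 counts assessments per BB, not surveys. Each respondent could assess several BBs, so the per-BB figures add up to more than the 10 valid surveys reported in Table 2. The short Python sketch below uses only the counts transcribed from Table 1 and aggregates them per category. The per-survey average rests on an assumption of ours that the table does not state: every counted assessment comes from one of the 10 valid surveys.

```python
# Assessment counts transcribed from Table 1, grouped by the table's category rows.
TABLE_1 = {
    "Common Components": {
        "eDelivery (data exchange)": 3,
        "SMP/SML": 2,
        "DE4A Connector": 2,
        "DE4A Playground": 6,
    },
    "Semantic": {
        "Information Exchange Model": 7,
        "Canonical Data Models": 6,
        "ESL (implemented as part of SMP/SML)": 1,
        "IAL": 1,
        "MOR": 1,
    },
    "eID/PoR": {
        "SEMPER": 1,
    },
    "VC Pattern": {
        "SSI Authority agent": 4,
        "SSI User agent (mobile)": 4,
        "EBSI-ESSIF (CEF Blockchain)": 1,
    },
}

# Assumption: every assessment in Table 1 comes from one of the
# 10 valid surveys listed in Table 2.
VALID_SURVEYS = 10


def assessments_per_category(table):
    """Sum the per-BB assessment counts within each category."""
    return {category: sum(bbs.values()) for category, bbs in table.items()}


def total_assessments(table):
    """Total number of BB assessments across all categories."""
    return sum(assessments_per_category(table).values())


if __name__ == "__main__":
    for category, count in assessments_per_category(TABLE_1).items():
        print(f"{category}: {count}")
    total = total_assessments(TABLE_1)
    n_bbs = sum(len(bbs) for bbs in TABLE_1.values())
    print(f"{n_bbs} BBs, {total} assessments, "
          f"{total / VALID_SURVEYS:.1f} BB assessments per survey on average")
```

Run as a script, it prints 13, 16, 1 and 9 assessments for the four categories. That gives 39 assessments over 13 BBs, or 3.9 BB assessments per survey on average under the stated assumption.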

Questionnaire design

This questionnaire aims to analyze the extent to which, and the ways in which, a list of BBs cataloged during the first assessment phase of this task were employed. Through a number of assessment categories and the indicators assigned to them, the questionnaire supports a methodology that allows us to qualify and quantify the applicability, functionality, maturity and reusability potential of each BB, especially in view of the DE4A project.

The questionnaire contains 9 sections (categories), inquiring about:

- Inventory of BBs and functionalities;

- Interoperability;

- Maturity;

- Openness;

- Ease of implementation;

- Meeting pilot requirements;

- Performance (Non-functional requirements);

- Patterns; and

- Trust, Identity, Security, Privacy, Protection.

The questionnaire was distributed among the aforementioned relevant partners, and feedback was collected between 28.11.2022 and 12.12.2022. Based on the insights from this feedback, we were also able to extract valuable lessons learned from the implementation practices, as well as recommendations for future reuse of the analyzed BBs.

Note: The BB assessment includes the BBs used in both iterations of the pilots, as well as the BBs used in the playground and the mocked DE/DO.

Results and Analysis

Data and preprocessing

After the data gathering period, a total of 10 surveys with valid input were received, providing full coverage of the BBs by the foreseen parties (see Table 2). This provides a sufficient basis to proceed with the analysis of the results and finalize the BB assessment.

Table 2. Distribution of received valid feedback per partner type

| Partner type | # of assessments done |
|---|---|
| 1 (WP5 representative) | 2 |
| 2 (DBA pilot representative) | 1 |
| 3 (SA pilot representative) | 4 |
| 4 (MA pilot representative) | 1 |
| 5 (MS representative) | 2 |
| Total valid | 10 |
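As a consistency check, the per-type counts in Table 2 should add up to the 10 valid surveys. The Python sketch below is built only from the Table 2 figures. It verifies this total and computes each partner type's share of the responses. The result makes explicit that the SA pilot contributed 40% of the responses, which is worth keeping in mind when reading the per-BB results.

```python
# Valid questionnaire responses per partner type, transcribed from Table 2.
RESPONSES = {
    "1 (WP5 representative)": 2,
    "2 (DBA pilot representative)": 1,
    "3 (SA pilot representative)": 4,
    "4 (MA pilot representative)": 1,
    "5 (MS representative)": 2,
}

REPORTED_TOTAL = 10  # the "Total valid" row of Table 2


def shares(responses):
    """Return each partner type's share of all valid responses, in percent."""
    total = sum(responses.values())
    return {partner: 100 * count / total for partner, count in responses.items()}


if __name__ == "__main__":
    # The per-type counts should add up to the reported number of valid surveys.
    assert sum(RESPONSES.values()) == REPORTED_TOTAL
    for partner, pct in shares(RESPONSES).items():
        print(f"{partner}: {pct:.0f}%")
```

Running it prints shares of 20%, 10%, 40%, 10% and 20% for partner types 1 to 5.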

Data analysis

In this section, we analyze the obtained data and place the results both in the DE4A context and in the wider context relevant for reusing the analyzed BBs. We then provide a comparative analysis of the two BB evaluation phases carried out in the DE4A project.

Inventory of BBs and functionalities

In this part of the survey, we inquired about the new functionalities and requirements defined for the BBs during the project lifetime.

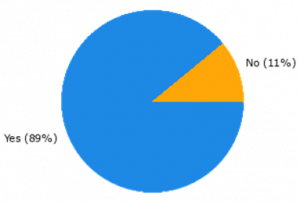

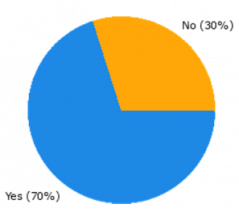

In 9 of the 10 cases, there were new functionalities defined for one or more of the implemented BBs, and in 7 of the 10 cases, new requirements as well. This is shown in Figure 1a) and b), respectively.

Of the functionalities, most noticeable were:

- Iteration 2: definition of notification request and response regarding the Subscription and Notification (S&N) pattern;

- Evidence request, DE/DO mocks;

- Average grade element was added to the diploma scheme, due to requirements at the Universitat Jaume I (UJI) to rank applicants;

- Automatic confirmation of messages;

- Canonical data models: additional information to generate other types of evidence;

- Capacity to differentiate between "environments" (mock, preproduction and piloting) in the information returned by the IAL, since in the second iteration we had just one playground for all the environments; and

- Deregistration, multi evidence.

Figure 1. a) New functionalities; b) New requirements for the existing BBs defined in DE4A lifetime

Of the new requirements[1], the following were noted:

- Those necessary to define and implement the above functionalities;

- SSI authority agent and SSI mobile agent were updated to simplify the SA UC3 ("Diploma/Certs/Studies/Professional Recognition") service;

- Subscription and Notification pattern, Evidence Request, DE/DO mocks (see the solution architecture for iteration 2 on the project wiki for the requirements regarding Subscription and Notification); and

- Deregistration.