
== Building Block Assessment - 2nd Phase ==

The second phase of the assessment of the architecture building blocks (BBs) used in the DE4A project follows the cataloging of an initial set of relevant BBs for the Project Start Architecture (PSA). While the first phase assessed the BBs for their technical, administrative and operational maturity, this phase evaluated, from a wider perspective, the narrower set of BBs actually implemented by the pilots.

== Methodology ==

The evaluation methodology was designed following general systemic principles, with a set of indicators that includes usability, openness, maturity and interoperability. The evaluation criteria were identified, and the results analyzed, from several perspectives: a literature survey and thorough desk research; a revision and fine-tuning of the initial BB set and evaluation methodology; and an online questionnaire designed to gather feedback from the relevant project partners:

* Member States (MSs)
* WP4 partners - Pilots ([[Doing Business Abroad Pilot|Doing Business Abroad - DBA]], [[Moving Abroad Pilot|Moving Abroad - MA]], and [[Studying Abroad Pilot|Studying Abroad - SA]])
* WP5 partners - for specific components

To revise and fine-tune the evaluation methodology developed in the first phase, as well as the resulting set of BBs selected for assessment, several meetings and consultations were held with the aforementioned project partners. This led to a narrower set of relevant BBs, consisting of a subset of those assessed in the first phase plus new ones arising from the piloting needs and implementation. To capture these specificities, the survey contains a wide set of questions covering all aspects relevant to the decisions made and to future reuse. The initial set of questions went through several iterations, in which the relevance of each question was determined according to the roles of the project partners providing feedback. Finally, a feedback coordination process was set up for the pilot leaders, who gathered additional information from the pilot partners on their implementation of some of the BBs (or important aspects of them).

It is important to note that partners were grouped by their role in the project because each group had experience with a different set of BBs. Consequently, not every partner evaluated the whole set of BBs, but only the BBs relevant to their practice and research in the DE4A project.

The final set of relevant BBs per partner type is given in Table 1, together with the number of assessments obtained for each BB.

{| class="wikitable"
|+Table 1. List of building blocks evaluated in the second phase, per relevant partner
|'''#'''
|'''Building Block'''
|'''# of assessments on the BB'''
|'''Relevant partner(s)'''
|-
|
|'''Common Components'''
|
|
|-
| '''1'''
|eDelivery (data exchange)
|3
|WP5/MSs
|-
| '''2'''
|SMP/SML
|2
|MSs via pilots
|-
| '''3'''
|DE4A Connector
|2
|MSs via pilots
|-
| '''4'''
|DE4A Playground
|6
|DBA/SA/MA
|-
|
|'''Semantic'''
|
|
|-
| '''5'''
|Information Exchange Model
|7
|DBA/SA/MA/WP5
|-
| '''6'''
|Canonical Data Models
|6
|DBA/SA/MA DE and DO pilot partners
|-
| '''7'''
|ESL (implemented as part of SMP/SML)
|1
|WP5
|-
| '''8'''
|IAL
|1
|WP5
|-
| '''9'''
|MOR
|1
|MA pilot partners
|-
|
|'''eID/PoR'''
|
|
|-
| '''10'''
|SEMPER
|1
|DBA
|-
|
|'''VC Pattern'''
|
|
|-
| '''11'''
|SSI Authority agent
|4
|SA
|-
| '''12'''
|SSI User agent (mobile)
|4
|SA
|-
| '''13'''
|EBSI-ESSIF (CEF Blockchain)
|1
|WP5 (T5.4)
|}
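As a quick consistency check on Table 1, the per-BB counts can be summed per category. The Python sketch below simply transcribes the table (the dictionary layout and variable names are our own, for illustration only). The total exceeds the 10 valid surveys reported in Table 2 because a single questionnaire could cover several BBs.

```python
# Number of assessments per BB, transcribed from Table 1 and grouped
# by the table's section rows. Layout and names are illustrative only.
ASSESSMENTS = {
    "Common Components": {
        "eDelivery (data exchange)": 3,
        "SMP/SML": 2,
        "DE4A Connector": 2,
        "DE4A Playground": 6,
    },
    "Semantic": {
        "Information Exchange Model": 7,
        "Canonical Data Models": 6,
        "ESL (implemented as part of SMP/SML)": 1,
        "IAL": 1,
        "MOR": 1,
    },
    "eID/PoR": {"SEMPER": 1},
    "VC Pattern": {
        "SSI Authority agent": 4,
        "SSI User agent (mobile)": 4,
        "EBSI-ESSIF (CEF Blockchain)": 1,
    },
}


def totals_per_category(table):
    """Sum the assessment counts of all BBs within each category."""
    return {category: sum(bbs.values()) for category, bbs in table.items()}


per_category = totals_per_category(ASSESSMENTS)
total = sum(per_category.values())
n_bbs = sum(len(bbs) for bbs in ASSESSMENTS.values())

for category, count in per_category.items():
    print(f"{category}: {count}")
print(f"Total: {total} assessments over {n_bbs} BBs")
```

Running it prints 13 (Common Components), 16 (Semantic), 1 (eID/PoR) and 9 (VC Pattern), for a total of 39 assessments over 13 BBs.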

== Questionnaire design ==

This questionnaire aims to analyze the extent to which, and the ways in which, a list of BBs cataloged during the first assessment phase of this task was employed. Through a number of assessment categories and the indicators assigned to them, the questionnaire supports a methodology that allows us to qualify and quantify the applicability, functionality, maturity and reusability potential of each BB, especially in view of the DE4A project.

The questionnaire contains nine sections (categories), covering:

* Inventory of BBs and functionalities;
* Interoperability;
* Maturity;
* Openness;
* Ease of implementation;
* Meeting pilot requirements;
* Performance (Non-functional requirements);
* Patterns; and
* Trust, Identity, Security, Privacy and Protection.

The questionnaire was distributed among the relevant partners listed above, and feedback was collected between 28.11.2022 and 12.12.2022. The insights from this feedback also allow us to extract valuable lessons learned from the implementation practices, as well as recommendations for future reuse of the analyzed BBs.

'''''Note:''''' ''The BB assessment includes the BBs used in both iterations of the pilots. It also includes BBs used in the playground and the mocked DE/DO.''

== Results and Analysis ==

== Data and preprocessing ==

After the data gathering period, a total of 10 surveys with valid input were received, representing full coverage of the BBs by the foreseen parties (see Table 2). This was considered sufficient to proceed with the analysis of the results and to finalize the BB assessment.

{| class="wikitable"
|+Table 2. Distribution of received valid feedback per partner type
|'''Partner type'''
|'''# of valid surveys received'''
|-
|1 (WP5 representative)
|2
|-
|2 (DBA pilot representative)
|1
|-
|3 (SA pilot representative)
|4
|-
|4 (MA pilot representative)
|1
|-
|5 (MS representative)
|2
|-
|'''Total (valid)'''
|'''10'''
|}
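The rows of Table 2 can likewise be checked against the reported total. This minimal Python sketch (variable names are illustrative) sums the valid surveys per partner type and expresses each as a whole-number share of the total.

```python
# Valid surveys per partner type, transcribed from Table 2.
RESPONSES = {
    "WP5 representative": 2,
    "DBA pilot representative": 1,
    "SA pilot representative": 4,
    "MA pilot representative": 1,
    "MS representative": 2,
}

total = sum(RESPONSES.values())
# Share of each partner type, as a whole-number percentage of all valid surveys.
shares = {partner: round(100 * n / total) for partner, n in RESPONSES.items()}

print(f"Total valid: {total}")
# List partner types from the largest to the smallest share.
for partner, pct in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{partner}: {pct}%")
```

It confirms the total of 10 and gives 40% for the SA pilot representatives, 20% each for the WP5 and MS representatives, and 10% each for the DBA and MA pilot representatives.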

== Data analysis ==

In this section, we present the analysis of the obtained data and place the results both in the DE4A context and in the wider context relevant for reusing the analyzed BBs. We then provide a comparative analysis of the two evaluation phases of the BBs carried out in the DE4A project.

=== Inventory of BBs and functionalities ===
In this part of the survey, we asked about the new functionalities and requirements defined for the BBs during the project lifetime.

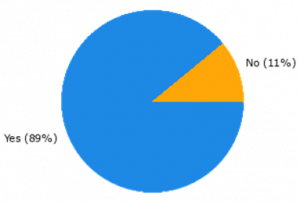

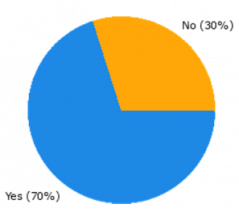

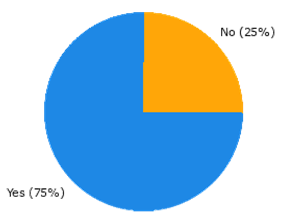

In 9 of the 10 cases, new functionalities were defined for one or more of the implemented BBs, and in 7 of the 10 cases, new requirements were defined as well. This is shown in Figure 1a) and b), respectively.

The most notable new functionalities were:

* Iteration 2: definition of the notification request and response for the Subscription and Notification (S&N) pattern;
* Evidence request, DE/DO mocks;
* An average grade element was added to the diploma scheme, due to requirements at the Universitat Jaume I (UJI) to rank applicants;
* Automatic confirmation of messages;
* Canonical data models: additional information to generate other types of evidence;
* Capacity to differentiate between "environments" (mock, preproduction and piloting) in the information returned by the IAL, since in the second iteration there was just one playground for all the environments; and
* Deregistration, multi-evidence.

[[File:New functionalities.png|thumb|alt=|none|Figure 1. a) New functionalities]]
[[File:New requirements.png|thumb|239x239px|alt=|none|Figure 1. b) New requirements for the existing BBs defined in DE4A lifetime]]

Of the new requirements[1], the following were noted:

* Those necessary to define and implement the above functionalities;
* The SSI authority agent and SSI mobile agent were updated to simplify the [[Use Case "Diploma/Certs/Studies/Professional Recognition" (SA UC3)|SA UC3 ("Diploma/Certs/Studies/Professional Recognition")]] service;
* Subscription and Notification pattern, Evidence Request, DE/DO mocks (see the iteration 2 solution architecture on the project's wiki for the requirements regarding Subscription and Notification); and
* Deregistration.

In addition to the new functionalities, we also asked about redundant ones, i.e. those that were already defined but found unusable in the context of DE4A. In 4 of the 10 cases, redundant functionalities were found for the implementation of the pilot.

In addition to the BBs on the evaluation list, 4 of the partners also pointed out the following additional BBs or components employed in the pilots:

* Logs and error messages (in the MA pilot);
* IAL / IDK (used by the Member States); and
* eIDAS (used by the SA pilot and the MSs).

These provided the following additional functionalities:

* Cross-border identification of students;
* Clarity and understanding; and
* Authentication and lookup of routing information.

For the additional BBs, one new functionality was also defined during piloting: Common Errors.


Finally, the survey also asked whether completely new BBs had been defined during the lifespan of the project. According to the partners' feedback, such BBs were defined in 4 of the 10 cases, and all of them were regarded as '''reusable''' in other projects and contexts, for example:

* For any SSI or verifiable-credential-like pattern;
* MOR semantics in EBSI and SDGR; and
* In the IAL, for looking up routing information.
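For readability, the counts reported in this subsection can be turned into shares of the 10 valid surveys. The Python sketch below does only that; the question labels are our own paraphrases of the survey items, not their original wording.

```python
N_RESPONSES = 10  # valid surveys (Table 2)

# Number of responses answering "yes", as reported in this subsection.
# The keys paraphrase the survey questions; they are not the original wording.
YES_COUNTS = {
    "new functionalities defined": 9,
    "new requirements defined": 7,
    "redundant functionalities found": 4,
    "completely new BBs defined": 4,
}


def share(count, n=N_RESPONSES):
    """Return count as a percentage of n responses."""
    return 100 * count / n


for question, count in YES_COUNTS.items():
    print(f"{question}: {count}/{N_RESPONSES} ({share(count):.0f}%)")
```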


=== BB Interoperability ===

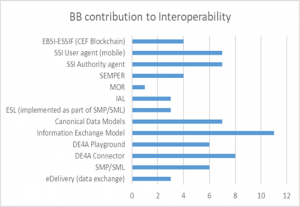

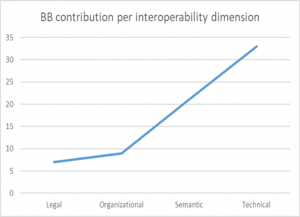

EIF/EIRA defines four dimensions of interoperability: Legal, Organizational, Semantic and Technical. Thus, in addition to analyzing the contribution of each BB to interoperability (as defined by EIF/EIRA), we also examined in more detail how each interoperability dimension was addressed in the DE4A project through the implementation of the given set of BBs. This was done both for the current implementation of the BBs and for the potential of each BB to contribute to (the dimensions of) interoperability in contexts other than DE4A.

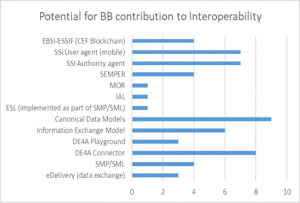

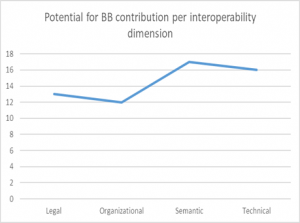

Figure 2a) shows the contribution of each BB to interoperability in the context of DE4A, while Figure 2b) breaks this contribution down per interoperability dimension.

Furthermore, Figure 3a) shows the potential of each BB to contribute to interoperability in contexts other than DE4A, while Figure 3b) shows the contribution of the analyzed BBs per interoperability dimension in general. Note that this latter analysis (of the BBs' potential) also takes into account the additional functionalities defined for the BBs during the DE4A lifespan, through their piloting and the updated set of requirements. Thus, these figures also provide evidence of DE4A's contribution to improving the potential of the BBs to respond to a wider set of interoperability requirements along each of the four dimensions: Legal, Organizational, Semantic and Technical.
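The text does not state how the per-dimension views in Figures 2b) and 3b) were derived from the responses. Assuming each response simply ticks the EIF dimensions to which a BB contributes, a plain count per dimension would produce such a view. The Python sketch below illustrates that assumption only; the function name, the input shape and the example answers are hypothetical and are not survey data.

```python
from collections import Counter

# The four EIF interoperability dimensions named in the text.
DIMENSIONS = ("Legal", "Organizational", "Semantic", "Technical")


def tally_dimensions(responses):
    """Count how often each dimension was ticked across all responses.

    `responses` is a list of (building_block, dimensions) pairs, where
    `dimensions` is a set of names taken from DIMENSIONS (hypothetical shape).
    """
    # Start every dimension at zero so unticked ones still appear.
    counts = Counter({dim: 0 for dim in DIMENSIONS})
    for bb, dims in responses:
        unknown = set(dims) - set(DIMENSIONS)
        if unknown:
            raise ValueError(f"{bb}: unknown dimension(s) {sorted(unknown)}")
        counts.update(dims)
    return counts


# Hypothetical answers for illustration only; these are NOT survey results.
example = [
    ("BB-A", {"Technical", "Semantic"}),
    ("BB-B", {"Technical"}),
    ("BB-C", {"Legal", "Organizational", "Technical"}),
]
print(dict(tally_dimensions(example)))
```

Keeping zero counts for unticked dimensions ensures all four dimensions appear in the result, as they would on a chart axis.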


[[File:Contribution of each BB.png|thumb|alt=|none|Figure 2. a) Contribution of each BB to interoperability in the context of DE4A]]
[[File:Contribution per interoperability dimension..png|none|thumb|Figure 2. b) Contribution per interoperability dimension]]
[[File:Potential for contribution of each BB.png|none|thumb|Figure 3. a) Potential for contribution of each BB to interoperability]]
[[File:Potential for contribution per interoperability dimension.png|none|thumb|Figure 3. b) Potential for contribution per interoperability dimension]]
Finally, as part of the analysis of BB interoperability, the survey asked the relevant partners to indicate whether a BB could help enable other technologies or standards. In that sense, the DE4A-VC pattern makes use of a wallet, which could be an enabler for the EUDI Wallet. It was also noted as having potential for reuse to enable the uptake of EBSI. Furthermore, the DBA pilot makes use of SEMPER, which could facilitate the adoption and spread of SEMPER as a standard for authentication and powers/mandates. In addition, the CompanyEvidence model could be used as an evidence standard in the implementation of the SDG OOTS.

=== BB Maturity ===
In the [[Building Blocks|first phase of the BB assessment]], domain experts provided a qualitative assessment of the maturity of the BBs along the following dimensions:

# Technical
# Administrative
# Operational

The aim of this initial feedback was to provide the grounds for a comparative analysis over the same dimensions after the current (second) phase of the BB assessment, i.e. to check whether anything had changed in the meantime.

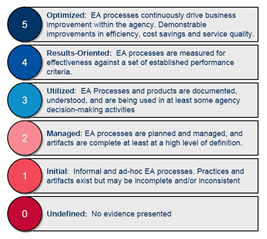

Each relevant partner rated the maturity of the given set of BBs on the following scale: 0 - Discarded, 1 - Useful, 2 - Acceptable, 3 - Recommended. This is the same scale used for the assessment of the BBs in the first phase. For reference, the semantics behind these scores are shown again in Figure 4 below.
[[File:Grading semantics of the evaluation scores used in the first and the second phase.png|none|thumb|Figure 4. Grading semantics of the evaluation scores used in the first and the second phase]]

However, the BB assessment in this phase differs from the previous one in one important respect. Whereas previously a single evaluation score was obtained to determine the general maturity of a BB, in the current iteration the 13 BBs were evaluated for each type of maturity (Technical, Administrative, Operational). This refines the initial methodology, which proved insufficiently granular to provide correct output. Due to this lack of granularity and the inability to capture some contextual specificities, in the first phase the SEMPER building block was denoted as '''Immature''', but was still '''Recommended''' for use in DE4A.
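To make the methodological change concrete: instead of one maturity score per BB, the second phase records one score per aspect on the same 0-3 scale. A minimal Python sketch of such a record, assuming integer scores and using a hypothetical class name and made-up example values, could look as follows.

```python
from dataclasses import dataclass

# The 0-3 scale used in both assessment phases.
SCALE = {0: "Discarded", 1: "Useful", 2: "Acceptable", 3: "Recommended"}
ASPECTS = ("technical", "administrative", "operational")


@dataclass(frozen=True)
class MaturityScore:
    """Hypothetical per-aspect maturity record (one score per aspect)."""

    technical: int
    administrative: int
    operational: int

    def __post_init__(self):
        # Reject any score that is not on the 0-3 scale.
        for aspect in ASPECTS:
            value = getattr(self, aspect)
            if value not in SCALE:
                raise ValueError(f"{aspect} score {value!r} is not on the 0-3 scale")

    def labels(self):
        """Map each aspect's numeric score to its label on the scale."""
        return {aspect: SCALE[getattr(self, aspect)] for aspect in ASPECTS}


# Hypothetical scores for an unnamed BB, for illustration only.
score = MaturityScore(technical=3, administrative=2, operational=1)
print(score.labels())
```

With three fields, a BB such as SEMPER could be rated low on one aspect and high on another, which a single overall score cannot express.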

| + | |||

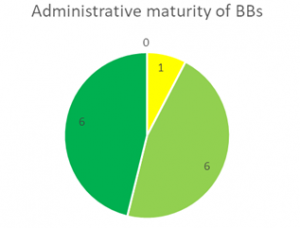

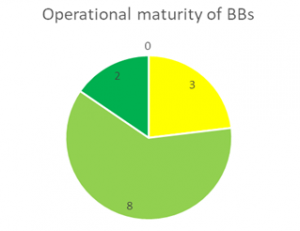

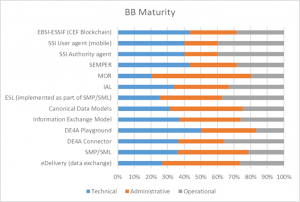

As Figures 5a), b) and c) below show, none of the 13 BBs analyzed in this phase was '''Discarded''' for future implementation and reuse. The highest level of maturity was achieved in the administrative context, where almost half of the BBs are considered to be completely aligned with current EU policies and only one BB (IAL) is denoted as unstable. In terms of technical maturity, most of the BBs are implemented and running, while 2 (IAL and MOR) are still unstable and under development. The same holds for operational maturity, where 3 BBs are deemed unstable: SMP/SML[1], IAL and MOR. Hence, it is reasonable to pinpoint IAL as the least mature BB, although it is not to be discarded for reuse and uptake by other projects.
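The reasoning that singles out IAL can be reproduced with simple set operations over the BBs reported as unstable in each aspect. This Python sketch only encodes the lists from the paragraph above; the variable names are illustrative.

```python
# BBs reported as unstable per maturity aspect (from the paragraph above).
UNSTABLE = {
    "technical": {"IAL", "MOR"},
    "administrative": {"IAL"},
    "operational": {"SMP/SML", "IAL", "MOR"},
}

# BBs unstable in every aspect, and BBs unstable in at least one aspect.
unstable_everywhere = set.intersection(*UNSTABLE.values())
unstable_somewhere = set.union(*UNSTABLE.values())

print("Unstable in all aspects:", sorted(unstable_everywhere))
print("Unstable in at least one aspect:", sorted(unstable_somewhere))
```

The intersection contains only IAL, matching the conclusion above, while the union (IAL, MOR and SMP/SML) lists every BB flagged as unstable in at least one aspect.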

[[File:Technical.png|none|thumb|Figure 5. a) Technical Maturity of BB]]
[[File:Administrative.png|none|thumb|Figure 5. b) Administrative Maturity of BB]]
[[File:Operational.png|none|thumb|Figure 5. c) Operational Maturity of BB]]


| + | Figure 6 provides insight into the general state of maturity of each of the BBs, showing that each of the 13 building blocks integrates all aspects of maturity in its overall maturity. Furthermore, the figure also makes it evident that some of the BBs are more advanced in one aspect and less in others. For instance, the DE4A Playground demonstrates higher technical maturity, whereas its operational maturity has been reached to a lesser extent. Similarly, MOR’s maturity is mainly in administrative sense, and to a lesser extent in the technical and operational sense. | ||

| + | [[File:General state of maturity of each BBs.png|none|thumb|Figure 6. General state of maturity of each BBs]] | ||

| + | |||

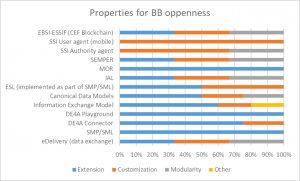

| + | === BB Openness === | ||

| + | In this section, we analyzed the openness of each of the 13 BBs along several dimensions, i.e. properties: Extensibility, Customizability and Modularity. As Figure 7 shows, the most prevalent of all is ''Extensibility'', present in most of the BB except the SSI User and Authority agent. Most of the BBs lend themselves to Customization, and more than half are also modular. | ||

| + | [[File:Properties enabling building block openness.png|none|thumb|Figure 7. Properties enabling building block openness]] | ||

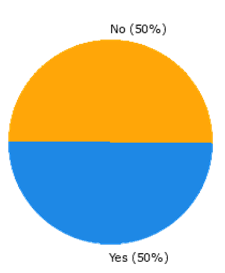

It is important to note, however, that 75% of the partners stated that the implementation, i.e. the use, of some of the BBs is technology-, platform- or solution-dependent. For instance, the IEM is DE4A-specific, while the CompanyData model is usable beyond DE4A. Furthermore, many components depend on a one-man company, which introduces risks in terms of support and maintenance. Similarly, EBSI and federated services were denoted as solution/platform-dependent, especially in the context of mixed environments.

Finally, half of the BBs were also marked as sector-specific: some are only relevant for public administrations, others for certain education environments (e.g. the canonical data models in the SA pilot are specific to higher education), and some for the business domain.

{| class="wikitable"
|+Table 3. BB usability traits in the context of DE4A
|'''#'''
|'''Building Block'''
|'''Ease of deployment'''
|'''Ease of configuration'''
|'''Ease of integration with other BBs/existing SW'''
|'''Barriers for implementation'''
|-
| '''1'''
|'''eDelivery (data exchange)'''
|It is embedded into the Connector;

Easy
|3/5;

Tricky (certificates)
|3/5;

Easy
|Certificates;

Support by one-man company
|-
| '''2'''
|'''SMP/SML'''
|No;

Easy
|No;

Difficult
|No;

Easy
|Certificates;

Support by one-man company
|-
| '''3'''
|'''DE4A Connector'''
|No;

Easy
|No;

Difficult
|Fairly easy integration with data evaluator
|No
|-
| '''4'''
|'''DE4A Playground'''
|N/A
|N/A
|Fairly easy integration with data evaluator
|No
|-
| '''5'''
|'''Information Exchange Model'''
|Yes
|4/5;

Yes
|4/5, yes, transparent to data evaluators
|No
|-
| '''6'''
|'''Canonical Data Models'''
|Fairly easy
|Yes
|CompanyEvidence was easy to use in the integration with BusReg;

Fairly easy integration with data evaluator
|No
|-
| '''7'''
|'''ESL (implemented as part of SMP/SML)'''
|5/5
|3/5
|2/5
|No
|-
| '''8'''
|'''IAL'''
|5/5
|2/5
|2/5
|Yes. Business cards from TOOP were reused, but their data model did not completely meet DE4A's needs for the IAL functionality. However, a working solution was provided.
|-
| '''9'''
|'''MOR'''
|N/A
|N/A
|N/A
|N/A
|-
| '''10'''
|'''SEMPER'''
|Normal
|Normal
|Normal
|No
|-
| '''11'''
|'''SSI Authority agent'''
|Yes
|Yes
|Yes
|No
|-
| '''12'''
|'''SSI User agent (mobile)'''
|Yes
|Yes
|Yes
|No
|-
| '''13'''
|'''EBSI-ESSIF (CEF Blockchain)'''
|Normal
|Normal
|Normal
|No
|}

Based on these experiences, practical recommendations for the use and implementation of the BBs are also provided (see Table 4 below), together with pointers to some of their successful uses in the context of DE4A.

{| class="wikitable"
|+Table 4. Recommendations for use and implementation of the BBs
|'''#'''
|'''Building Block'''
|'''Practical recommendation for use'''
|'''Used in'''
|-
| '''1'''
|'''eDelivery (data exchange)'''
|Make certificate management easier
|Used by multiple Interaction Patterns (IM, USI, S&N, L, DReg?);

Can be considered common infrastructure
|-
| '''2'''
|'''SMP/SML'''
|Make certificate management easier
|Part of eDelivery (idem)
|-
| '''3'''
|'''DE4A Connector'''
|The concept of a Connector with an integrated AS4 gateway allowed for easier integration of DEs and DOs in the USI pattern. Decoupling the exchange and business layers allows for abstraction and adds flexibility to the exchange model. The DE4A Connector reference implementation helped MSs to integrate their data evaluators and data owners and to enable connectivity between the states more easily;

Make certificate management easier.
|Used for easier integration of data evaluators and data owners
|-
| '''4'''
|'''DE4A Playground'''
|The DE4A Playground, with mock DE, mock DO, test connectors and a shared test SMP, proved successful for validating national connectors and SMP installations and for easier DE and DO integration.
|Used for easier integration of DEs and DOs
|-
| '''5'''
|'''Information Exchange Model'''
|N/A
|To fully understand the XSDs; transparent to pilots
|-
| '''6'''
|'''Canonical Data Models'''
|Assess fit for use in SDG OOTS;

Extend with additional attributes/data;

It was difficult to find a common denominator of higher education evidence from the three MSs for applications to higher education and applications for study grants (some data required by one MS might not be obtainable from another MS). This will become even more difficult when doing the same among all MSs. Many MSs also have difficulties providing the evidence required for certain procedures, e.g. non-academic evidence for applications for study grants, which can contain privacy-sensitive data. The pilot suggested to the DE4A Semantic Interoperability Solutions work package (WP3) to use the Europass data model as the basis for the higher education diploma scheme, so that the same schema could be used for both the USI and the VC pattern (and thus under both the SDG OOTS and the revised eIDAS regulation).
|Used for canonical evidence exchange
|-
| '''7'''
|'''ESL (implemented as part of SMP/SML)'''
|Documented in the relevant guidelines[1]
|Extension of SMP/SML, i.e. business cards
|-
| '''8'''
|'''IAL'''
|Documented in the relevant guidelines
|Conceptually part of IDK, central component providing routing information
|}


=== Meeting pilot requirements ===
After the piloting implementation of the BBs, we asked the pilot partners to document their experience of how well the BBs met the initial requirements of the particular piloting context. These experiences are listed in Table 5, which shows that most of the piloting requirements were met, although for some of the BBs new functionalities had to be provided (as discussed in the first subsection of this analysis) in order to achieve the piloting objectives.


{| class="wikitable"
|+Table 5. Inventory of piloting requirements related to each building block
|'''#'''
|'''Building Block'''
|'''Piloting requirements that were met'''
|'''Requirements that were not met'''
|-
| '''1'''
|'''eDelivery (data exchange)'''
|Message exchange;

All
|None
|-
| '''2'''
|'''SMP/SML'''
|All
|None
|-
| '''3'''
|'''DE4A Connector'''
|Met (4.75 on a 1-5 scale; see D4.3); All
|None
|-
| '''4'''
|'''DE4A Playground'''
|N/A
|N/A
|-
| '''5'''
|'''Information Exchange Model'''
|Include the information to request and send evidence, even multiple evidence;

All;

Met (4.5 on a 1-5 scale; see D4.3)
|None
|-
| '''6'''
|'''Canonical Data Models'''
|All;

Met (4.5 on a 1-5 scale; see D4.3)
|None;

Missing element was added to the diploma scheme for the final phase
|-
| '''7'''
|'''ESL (implemented as part of SMP/SML)'''
|Identify the DO’s evidence service
|None
|-
| '''8'''
|'''IAL'''
|Identify the evidence DO provider
|The data model of the IAL does not support all the information on Administrative Territorial Units designed by WP3
|-
| '''9'''
|'''MOR'''
|N/A
|N/A
|-
| '''10'''
|'''SEMPER'''
|N/A
|N/A
|-
| '''11'''
|'''SSI Authority agent'''
|Met (4 on a 1-5 scale; see D4.3)
|None
|-
| '''12'''
|'''SSI User agent (mobile)'''
|Met (4.5 on a 1-5 scale; see D4.3)
|None
|-
| '''13'''
|'''EBSI-ESSIF (CEF Blockchain)'''
|N/A
|N/A
|}
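Table 5 quotes requirement-fulfilment scores from D4.3 for five BBs. The Python sketch below collects them and computes a simple mean and minimum; the variable names are illustrative, and because the scores belong to different BBs, the mean is only an indicative summary computed here, not a figure taken from D4.3.

```python
from statistics import mean

# Requirement-fulfilment scores (1-5 scale) cited from D4.3 in Table 5.
D43_SCORES = {
    "DE4A Connector": 4.75,
    "Information Exchange Model": 4.5,
    "Canonical Data Models": 4.5,
    "SSI Authority agent": 4.0,
    "SSI User agent (mobile)": 4.5,
}

# The BB with the lowest cited score.
lowest = min(D43_SCORES, key=D43_SCORES.get)

print(f"Mean: {mean(D43_SCORES.values()):.2f}")
print(f"Lowest: {lowest} ({D43_SCORES[lowest]})")
print("All at least 4:", all(s >= 4 for s in D43_SCORES.values()))
```

All five cited scores are at least 4 on the 1-5 scale; the lowest is the SSI Authority agent (4), and the simple mean is 4.45.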

As the barriers to meeting piloting requirements and to smooth implementation may be of different natures, we analyzed them at a finer granularity. The barrier typology used here was defined in WP1 and can be found in Deliverable 1.8, “Updated legal, technical, cultural and managerial risks and barriers”. Table 6 presents the partners' experiences with implementation barriers, describing them in the context in which they were encountered.

{| class="wikitable"
|+Table 6. Barriers to BB implementation
|'''Type of barrier'''
|'''Description of barrier'''
|-
|'''Legal'''
|All MSs need to accept DLT;

How to authorize a DE to request a canonical evidence type about a specific subject.
|-
|'''Organizational'''
|Hire/engage internal staff;

The collection of signed information from partners to create the first PKI with Telesec, which took a lot of time and was very burdensome;

Manual trust management, horizontal trust model
|-
|'''Technical'''
|Invest in EBSI infrastructure;

Allow federated until 2027;

The need to learn different external technologies and protocols, such as eDelivery, in a very short time; maintainability
|-
|'''Semantic'''
|Terms become antiquated and need synonyms as hover text; no major issues;

Lack of canonical models at the semantic level, and lack of official pairings from local models to canonical models so that translation can be done for each pair of MSs
|-
|'''Business'''
|Allow private service providers to improve the usability of the UI;

No major issues related to BBs.
|-
|'''Political'''
|AI and ML in reuse of the data;

The obligation to use RegRep;

Following global standards
|-
|'''Human factor'''
|Too few resources for SW development;

The availability of the personnel assigned to DE4A, which more often than not has not been as expected.

Additionally, the withdrawal of some partners (such as eGovlab); usability.
|}

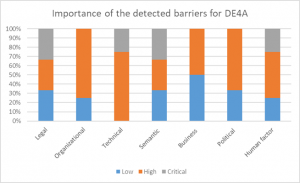

Figure 8 further details the level of criticality of the listed barriers in the context of DE4A.
[[File:Level of criticality of the barriers from Table 6 for DE4A.png|none|thumb|Figure 8. Level of criticality of the barriers from Table 6 for DE4A]]
All types of barriers had elements of high criticality that needed to be addressed, although the technical barriers were the most critical during implementation. These were mainly related to the need to learn different external technologies and protocols in a very short time, as in the case of eDelivery, but also to the maintainability and sustainability of certain regulatory requirements related to technical implementation. For all types of barriers, particular emphasis was placed on the time frames needed to meet certain requirements, which were often at odds with the technical readiness of the current infrastructure and the human capacity to respond to the required changes. Finally, the availability of resources was a factor in all barrier types, in terms of scarce human resources, technical expertise, administrative procedures, legislative readiness, infrastructure and staff availability, etc.


| + | === Performance and Non-Functional Requirements === | ||

| + | The same systematization of the types of barriers investigated above is also ascribed to the various aspects (dimensions) of the building blocks through which we can analyze the BBs performance at a more granular level. | ||

| + | |||

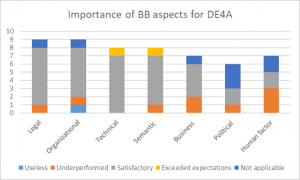

In that sense, Figure 9 shows the importance of each of these aspects (legal, organizational, technical, semantic, business, political and human factors) in the context of DE4A; in other words, the aspects that the responding partners found most important in their experience with piloting and implementation. The assessed BBs mostly performed satisfactorily across all dimensions, and even exceeded expectations on some technical and semantic traits. However, there is a (small) subset of traits across most dimensions on which the BBs underperformed. These mainly correspond to the barriers and unmet requirements discussed earlier in this analysis, some of which are also listed later in this section.

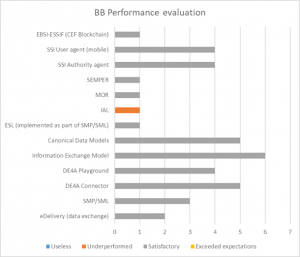

[[File:Importance of each BB aspect (dimension) for DE4A.png|none|thumb|Figure 9. Importance of each BB aspect (dimension) for DE4A]]
In terms of the overall performance of each building block in the context of DE4A, Figure 10 shows that the only BB rated as '''Underperforming''' is IAL, which was also flagged in the maturity analysis in each of the Technical, Administrative and Operational aspects.
[[File:Overall performance of each building block in the context of DE4A.png|none|thumb|Figure 10. Overall performance of each building block in the context of DE4A]]
Although there are no specific non-functional requirements (NFRs) with respect to performance, availability and scalability, and no performance tests were performed for DE4A, the aim of this section is to capture the partners’ perception of non-functional requirements based on their experience in DE4A. Table 7 provides an overview of the partners’ claims.

{| class="wikitable"
|+Table 7. Partners’ experience with non-functional requirements
|'''Type of NFR issue'''
|'''Encountered'''
|'''Foreseen'''
|-
|'''Performance'''
|Response times and uptime for some components;

Frequent issues with "external service outage" that would largely lower the availability of services.
|Connectors and SMP/SML can become single points of failure if they have performance issues in the future, when many DEs and DOs join;

Hard to say, as the number of users may be much higher in production;

Possible; the BB should be stress-tested by the EU/MSs before recommendation.
|-
|'''Availability'''
|Yes, due to software and configuration issues;

Several components were regularly unavailable;

Yes, IP changes frequently led to connectivity issues;

Yes, some services were unstable, but the situation improved during piloting.
|Yes, including external services
|-
|'''Scalability'''
|Yes. Components were not designed for high-availability deployments, nor for flexible scaling.
|Depending on the use of components, support and development may depend on a one-man company.
|}

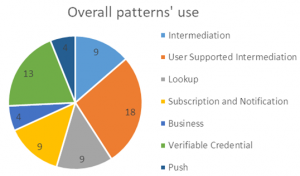

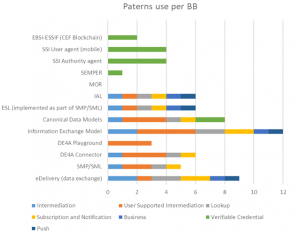

=== Patterns ===
Some of the building blocks are used only for specific interaction patterns, while others span multiple patterns. In this section, we analyze the correlation between the DE4A patterns and each of the 13 BBs, per pilot.

As Figure 11 shows, the most employed pattern is User Supported Intermediation (USI), closely followed by Verifiable Credential (VC), whereas the least used are the Push and Business patterns. However, this does not imply that these patterns are less useful, only that they were exploited to a lesser extent in DE4A, depending on the pilots’ needs. For more documentation and guidelines on when and how each of these patterns can be used and implemented, please see the relevant pilot wiki.

The Subscription and Notification (S&N) and LKP patterns are used in DBA, but should also be applicable to non-business contexts.

[[File:Overall use of DE4A patterns.png|none|thumb|Figure 11. Overall use of DE4A patterns]]

The use of each of these patterns per building block is shown in Figure 12.
[[File:Use of each pattern by each building block.png|none|thumb|Figure 12. Use of each pattern by each building block]]
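The per-pattern and per-BB views in Figures 11 and 12 amount to a tally over survey answers. The Python sketch below shows one way such a tally could be computed. It assumes that each answer can be reduced to a (pilot, BB, pattern) record and that a BB counts once per pilot and pattern; the record layout, the function names and the sample values are hypothetical and are not the DE4A survey data.

```python
from collections import Counter

# Hypothetical record layout: (pilot, building_block, pattern).
Record = tuple[str, str, str]


def overall_pattern_use(records: list[Record]) -> Counter:
    """Count, per pattern, the distinct (pilot, BB) pairs that use it (cf. Figure 11)."""
    unique = set(records)  # duplicate answers must not inflate the counts
    return Counter(pattern for _pilot, _bb, pattern in unique)


def patterns_per_bb(records: list[Record]) -> dict[str, set[str]]:
    """Map each BB to the set of patterns it was used in, across pilots (cf. Figure 12)."""
    result: dict[str, set[str]] = {}
    for _pilot, bb, pattern in records:
        result.setdefault(bb, set()).add(pattern)
    return result


if __name__ == "__main__":
    # Synthetic placeholder answers, not survey results.
    sample = [
        ("Pilot-X", "BB-1", "USI"),
        ("Pilot-X", "BB-1", "USI"),  # duplicate answer, counted once
        ("Pilot-Y", "BB-1", "VC"),
        ("Pilot-Y", "BB-2", "VC"),
        ("Pilot-Z", "BB-2", "S&N"),
    ]
    print(overall_pattern_use(sample))
    print(patterns_per_bb(sample))
```

Deduplicating the records first keeps repeated answers from the same pilot from making a pattern look more widely used than it is.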


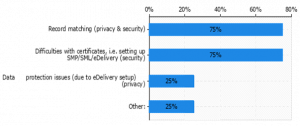

=== Trust, Identity, Security, Privacy, Protection ===
There are various trust models in use in DE4A, depending on the interaction patterns used. Each of these patterns comes with specifics related to authentication/establishing identity, security controls and possible privacy issues. This applies to data both at rest and in transit. Figure 13a) shows the nature and number of the specific security/privacy issues encountered during implementation.
[[File:Security and privacy issues encountered.png|none|thumb|Figure 13. a) Security and privacy issues encountered]]
[[File:No. of issues due to the BB use.png|none|thumb|Figure 13. b) # of issues due to the BB use]]

Most of the issues are related to security and arose from record matching and difficulties with certificates. The small number of privacy issues relate to data protection and mainly concern the use of eDelivery and the four-corner model during piloting.

The issues reported as being introduced by the use of a BB (Figure 13b)) mainly concern availability and connectivity, and are related to the use of certificates. However, these issues are not due to the nature of the BB or a lack of functionality, but to the absence of improvements that were needed to meet the implementation requirements. Thus, they do not affect the reusability of any of the assessed BBs.

To analyze the security issues encountered in greater depth, the survey asked about the security mechanisms available to handle and address such issues in terms of confidentiality, integrity and availability, as well as the BB features used for that purpose. The cases in which concrete BB features were employed to address security issues are presented in the charts of Figure 14 a), b) and c).
[[File:BB features used to address .png|none|thumb|Figure 14. a) Confidentiality]]
[[File:Confidentiality.png|none|thumb|Figure 14. b) Integrity]]
[[File:Availability.png|none|thumb|Figure 14. c) Availability]]

More concretely, the information confidentiality mechanisms used within the pilots were: eIDAS standard mechanisms, encryption and verification, passwords and security tokens.

The information integrity mechanisms used within the pilots were: hashing, access control and digital signatures.

Finally, the information availability mechanisms used within the pilots were: cloud server deployment, redundancy, firewalls, and DDoS attack prevention.

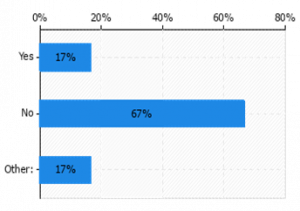

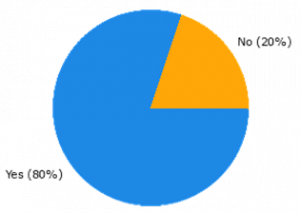


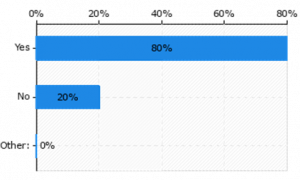

In most (80%) of the cases, the security mechanisms were used to counter specific cyber threats (Figure 15a)). In half of these, the vulnerabilities to a cyber-attack were introduced by the employed BBs (or their features) (Figure 15b)).
[[File:Extent of employing security mechanisms to counter specific cyber-threats.png|none|thumb|Figure 15. a) Extent of employing security mechanisms to counter specific cyber-threats]]
[[File:Frequency of BBs introducing new vulnerabilities.png|none|thumb|Figure 15. b) Frequency of BBs introducing new vulnerabilities]]
The features reported to introduce such vulnerabilities were certain libraries (such as log4j and the Spring Framework), and only at a certain point during piloting. These vulnerabilities were detected and fixed in time. Other vulnerabilities that were also encountered and addressed were DoS and an increased risk of data hijacking. To address these vulnerabilities, adequate countermeasures were employed, such as access control, channel encryption, authentication and digital signatures. For example, all communications were secured with certificates, so that they are encrypted. In addition, the components of the playground were deployed with redundancy.

= Discussion and comparative analysis =
In the first phase of the BB assessment, we used the Digital Services Model taxonomy to establish a common terminology and framework, but also to analyze the needs and requirements for the deployment of digital services in DE4A. The overall purpose of this approach is to enable continuity of the developed methodologies with the large-scale pilots. This taxonomy contains five levels of granularity:

# Standards and Specifications;
# Common code or Components;
# Building Blocks;
# Core Service Platforms;
# Generic Services.

Most of the BBs recommended in the first phase were of the type ‘Building Block’ and ‘Standards and specifications’. This is expected and is not an argument against maturity, as these are the elements/components that are most flexible in terms of configurability and deployment and, thus, most subject to reuse by other projects. To argue for the overall set of building blocks assessed for DE4A purposes, we rely on the principles of the Enterprise Architecture Assessment Framework (EAAF), shown in Figure 16, which were also presented in the first phase of the BB assessment.

While the output of the EAAF is a maturity level assessment of the overall architecture, the phased approach in DE4A creates an intermediate feedback loop between the pilots and the Project Start Architecture, allowing for adaptable integration of the assessment methodology within the WP2 change management. Although additional insights are needed to evaluate the overall PSA, it is still possible to obtain a quantitative assessment of the solution architecture based on the set of employed BBs. However, this value is not to be considered the maturity level of the overall architecture framework, but only one aspect of it that provides valid arguments for discussing the overall maturity. For instance, such a value can serve as a reference point to be compared against a given KPI for the overall maturity, if such a requirement exists.

To compile a single value for the current maturity level of a pilot solution architecture, the set of BB assessments and their recommendations are compared against the baseline of the EAAF maturity levels given in Figure 16. In the context of DE4A, the maturity evaluations provided in the section “BB Maturity” show that most of the BBs and their functionalities, implementation details and operation in the context of DE4A have been documented, understood, and used in at least some agency decision-making activities. However, the “Performance and Non-Functional Requirements” section shows that not all of them have gone through an effectiveness evaluation against a set of established performance criteria. Thus, '''the level of maturity of the overall set of DE4A architecture BBs according to the EAAF is 3.'''
[[File:EAAF Maturity levels.png|none|thumb|Figure 16. EAAF Maturity levels]]
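The decision rule in the paragraph above can be made explicit. The following Python sketch encodes only the boundary between EAAF levels 3 and 4 as the text describes it: level 3 when most BBs are documented, understood and used in decision-making, and level 4 only when, in addition, every BB has been evaluated for effectiveness against established performance criteria. The class and function names, and the reading of "most" as a strict majority (the `majority` parameter), are assumptions for illustration; the actual level definitions are those of Figure 16, and levels below 3 are deliberately not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BBEvidence:
    """Hypothetical per-BB evidence distilled from the assessment."""
    name: str
    documented: bool
    understood: bool
    used_in_decisions: bool
    effectiveness_evaluated: bool  # against established performance criteria


def meets_level3(e: BBEvidence) -> bool:
    return e.documented and e.understood and e.used_in_decisions


def eaaf_level(evidence: list[BBEvidence], majority: float = 0.5) -> int:
    """Return 3 or 4 for a set of BBs; lower levels are out of scope here."""
    if not evidence:
        raise ValueError("no BB evidence supplied")
    share = sum(meets_level3(e) for e in evidence) / len(evidence)
    if share <= majority:
        raise ValueError("level-3 baseline not met; lower levels are not modelled")
    # Level 4 additionally requires every BB to meet the level-3 criteria
    # and to have passed an effectiveness evaluation.
    if all(meets_level3(e) and e.effectiveness_evaluated for e in evidence):
        return 4
    return 3
```

Under this rule, a single BB without an effectiveness evaluation is enough to keep the whole set at level 3, which is consistent with the conclusion drawn above.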


= Conclusion =
In this analysis, we presented the second phase of a two-phase approach to the assessment of the building blocks used in the DE4A project. The entire approach followed a concrete methodology designed in the initial stages of the Project Start Architecture, and updated/fine-tuned during the piloting of the building blocks. The first assessment phase resulted in a list of BBs relevant and recommended for use in DE4A based on three aspects of their maturity: Technical, Administrative and Operational. Both the definition and the fine-tuning of the methodology, as well as the update of the list of relevant BBs, were done in close collaboration with the DE4A partners implementing and using the BBs: WP4 (pilots) partners, the Member States, and WP5 (components) partners.

During the second phase, 13 BBs were analyzed from a wider perspective, i.e. for their interoperability (across each EIF/EIRA dimension), maturity (across the same three dimensions used in the first phase), openness (across three dimensions), usability (across three dimensions), meeting pilot requirements, performance and non-functional requirements (across 7 dimensions), use of patterns, and handling of trust, identity, security and privacy issues in the context of DE4A. Moreover, implementation barriers of various kinds were identified: technical, business, legal, organizational, political, semantic and human factor. Based on the insights from the obtained feedback and the partners’ direct experiences, recommendations for future (re)use were also provided as part of the analysis.

On the one hand, this effort complements the other architecture tasks carried out in DE4A, supporting the design choices on the PSA. On the other hand, it testifies to the effective internal collaboration among a variety of DE4A partners. Finally, the approach serves as a documented body of reusable practices and solutions drawn from the DE4A partners’ experiences.

Latest revision as of 10:48, 3 February 2023

Building Block Assessment - 2nd Phase

The second phase of the assessment of the architecture building blocks (BBs) used in the DE4A project comes after the cataloging of an initial set of relevant BBs for the Project Start Architecture (PSA). While in the previous phase the BBs were assessed for their technical, administrative and operational maturity, in this phase a more constrained set of BBs actually implemented by the pilots was evaluated from a wider perspective.

Methodology

The methodology for evaluation was designed following general systemic principles, with a set of indicators including: usability, openness, maturity, interoperability, etc. The identification of the evaluation criteria and the analysis of the results have been approached from several perspectives: literature survey and thorough desktop research; revision and fine-tuning of the initial BB set and evaluation methodology; and an online questionnaire designed for gathering feedback from the relevant project partners:

- Member States (MSs)

- WP4 partners - Pilots (Doing Business Abroad - DBA, Moving Abroad - MA, and Studying Abroad - SA)

- WP5 partners - for specific components

To revise and fine-tune the evaluation methodology developed in the first phase, as well as the resulting set of BBs selected for assessment, several meetings and consultations were held with the aforementioned project partners. This led to a narrower set of relevant BBs, consisting of a subset of the ones assessed in the first phase and new ones resulting from the piloting needs and implementation. To capture these specificities, the survey included a wide set of questions covering all aspects important for the decisions made and for future reuse. Several iterations over the initial set of questions were performed, determining their relevance according to the roles of the project partners that provided feedback. Finally, a feedback coordination process was also set up for the pilot leaders, who gathered additional information from the pilot partners on their implementation of some of the BBs (or important aspects of them).

It is important to note that the partners were divided according to their role in the project because they had experience with different sets of BBs. This implies that not every partner evaluated the whole set of BBs, but only the BBs relevant for their practice and research in the DE4A project.

The final set of relevant BBs per partner type is given in Table 1, together with statistics on the number of assessments obtained for each BB.

| # | Building Block | # of assessments on the BB | Relevant partner(s) |
|---|---|---|---|
| Common Components | | | |
| 1 | eDelivery (data exchange) | 3 | WP5/MSs |
| 2 | SMP/SML | 2 | MSs via pilots |
| 3 | DE4A Connector | 2 | MSs via pilots |
| 4 | DE4A Playground | 6 | DBA/SA/MA |
| Semantic | | | |
| 5 | Information Exchange Model | 7 | DBA/SA/MA/WP5 |
| 6 | Canonical Data Models | 6 | DBA/SA/MA DE and DO pilot partners |
| 7 | ESL (implemented as part of SMP/SML) | 1 | WP5 |
| 8 | IAL | 1 | WP5 |
| 9 | MOR | 1 | MA pilot partners |
| eID/PoR | | | |
| 10 | SEMPER | 1 | DBA |
| VC Pattern | | | |
| 11 | SSI Authority agent | 4 | SA |
| 12 | SSI User agent (mobile) | 4 | SA |
| 13 | EBSI-ESSIF (CEF Blockchain) | 1 | WP5 (T5.4) |

Questionnaire design

This questionnaire aims to analyze the extent to which, and the ways in which, a list of BBs cataloged during the first assessment phase of this task was employed. Through a number of assessment categories and the indicators assigned to them, the questionnaire supports a methodology that allows us to qualify and quantify the applicability, functionality, maturity and potential for reusability of each of the BBs, especially in view of the DE4A project.

The questionnaire contains 9 sections, i.e. categories, inquiring on:

- Inventory of BBs and functionalities;

- Interoperability;

- Maturity;

- Openness;

- Ease of implementation;

- Meeting pilot requirements;

- Performance (Non-functional requirements);

- Patterns; and

- Trust, Identity, Security, Privacy, Protection.

The questionnaire was distributed among the relevant (aforementioned) partners, and the feedback was obtained in the period 28.11.2022 - 12.12.2022. Based on the insights from the provided feedback, we were also able to extract valuable lessons learned from the implementation practices, as well as recommendations for future reuse of the analyzed BBs.

Note: The BB assessment includes the BBs used in both iterations of the pilots. It also includes BBs used in the playground and the mocked DE/DO.

Results and Analysis

Data and preprocessing

After the data gathering period, a total of 10 surveys with valid input were received, providing full coverage of the BBs by the foreseen parties (see Table 2). This provides a sufficient basis to proceed with the analysis of the results and finalize the BB assessment.

| Partner type | # of assessments done |
|---|---|
| 1 (WP5 representative) | 2 |
| 2 (DBA pilot representative) | 1 |
| 3 (SA pilot representative) | 4 |
| 4 (MA pilot representative) | 1 |
| 5 (MS representative) | 2 |
| Valid | 10 |

Data analysis

In this section, we present the analysis of the obtained data and put the results in both the DE4A context and the wider context relevant for reusing the analyzed BBs. We then provide a comparative analysis between the two evaluation phases of the BBs carried out in the DE4A project.

Inventory of BBs and functionalities

In this part of the survey, we asked about the new functionalities and requirements defined for the BBs during the project lifetime.

In 9 of the 10 cases, new functionalities were defined for one or more of the implemented BBs, and in 7 of the 10 cases, new requirements as well. This is shown in Figure 1a) and b), respectively.

The most notable new functionalities were:

- Iteration 2: definition of notification request and response regarding the Subscription and Notification (S&N) pattern;

- Evidence request, DE/DO mocks;

- Average grade element was added to the diploma scheme, due to requirements at the Universitat Jaume I (UJI) to rank applicants;

- Automatic confirmation of messages;

- Canonical data models: additional information to generate other types of evidence;

- Capacity to differentiate between "environments" (mock, preproduction and piloting) in the information returned by the IAL, since in the second iteration we had just one playground for all the environments; and

- Deregistration, multi evidence.

Of the new requirements[1], the following were noted:

- Those necessary to define and implement the above functionalities;

- SSI authority agent and SSI mobile agent were updated to simplify the SA UC3 ("Diploma/Certs/Studies/Professional Recognition") service;

- Subscription and Notification pattern, Evidence Request, DE/DO mocks (see project’s wiki solution architecture iteration 2, for requirements regarding Subscription and Notification); and

- Deregistration.

In addition to the new functionalities, we also asked about redundant ones, i.e. those that were already defined but found unusable in the context of DE4A. In 4 of the 10 cases, redundant functionalities were found for the implementation of the pilot.

In addition to the BBs on the evaluation list, 4 of the partners also pointed out the following additional BBs or components that were employed in the pilots:

- Logs and error messages (in the MA-pilot);

- IAL / IDK (used by the Member States); and

- eIDAS (used by the SA pilot and the MSs).

With their use, the following additional functionalities were provided:

- Cross-border identification of students;

- Clarity and understanding; and

- Authentication and lookup routing information.

For the additional BBs, one new functionality was also defined during piloting: Common Errors.

Finally, the survey also asked whether completely new BBs had been defined during the lifespan of the project. According to the partners’ feedback, such BBs were defined in 4 of the 10 cases, and all of them were regarded as reusable in other projects and contexts as well, for example:

- For any SSI or verifiable credential like pattern;

- MOR semantics in EBSI and SDGR; and

- By IAL: for lookup routing information.

BB Interoperability

EIF/EIRA defines 4 dimensions of interoperability: Legal, Organizational, Semantic and Technical. Thus, in addition to analyzing the contribution of each BB to interoperability (as defined by EIF/EIRA), we also examined in more detail how each of the interoperability dimensions was addressed in the DE4A project (with the implementation of the given set of BBs). This was done both for the current implementation of the BBs and in view of the potential of each BB to contribute to (the dimensions of) interoperability in contexts other than DE4A.

Figure 2a) shows the contribution of each BB to interoperability in the context of DE4A, while Figure 2b) breaks this contribution down per interoperability dimension.

Furthermore, Figure 3a) provides insight into the potential of each BB to contribute to interoperability in contexts other than DE4A, while Figure 3b) depicts the contribution of the analyzed BBs per interoperability dimension, in general. Note that this latter analysis (of the BBs’ potential) also takes into account the additional BB functionalities defined during the DE4A lifespan, through their piloting and the updated set of requirements. Thus, these figures also provide evidence of DE4A’s contribution to improving the BBs’ potential to respond to a wider set of interoperability requirements along each of the four dimensions: Legal, Organizational, Semantic and Technical.

Finally, as part of the analysis of BB interoperability, the survey asked the relevant partners to indicate if a BB could help enable other technologies or standards. In that sense, the DE4A-VC pattern makes use of a Wallet, which could be an enabler for the EUDI Wallet. Moreover, it was also denoted as having the potential for reuse in order to enable take up of EBSI. Furthermore, the DBA pilot makes use of SEMPER, which could facilitate the adoption and spreading of SEMPER as a standard for authentication and powers/mandates. Finally, the CompanyEvidence model could be used as evidence standard with implementation of SDG OOTS.

BB Maturity

In the first phase of the BB assessment, domain experts provided qualitative assessment of the maturity of the BB along the following dimensions:

1. Technical

2. Administrative

3. Operational

The aim of this initial feedback was to provide the grounds for a comparative analysis over the same dimensions after the current (second) phase of the BB assessment (i.e. to check whether anything had changed in the meantime).

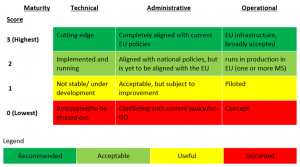

The following scale was used by each relevant partner to rate the maturity of the given set of BBs: 0 - Discarded, 1 - Useful, 2 - Acceptable, 3 - Recommended. This is the same scale employed for the assessment of the BBs in the first phase. For reference, the semantics behind these scores are given again in Figure 4 below.

However, there is one important aspect in which the BB assessment in this phase differs from the previous one. Whereas previously we obtained a single evaluation score to determine the general maturity of a BB, in the current iteration the 13 BBs were evaluated for each type of maturity (Technical, Administrative, Operational). This is a fine-tuning of the initial methodology, which proved insufficiently granular to produce correct output. Due to this lack of granularity and the inability to capture some contextual specificities, in the first phase the SEMPER building block was denoted as Immature, but was still Recommended for use in DE4A.

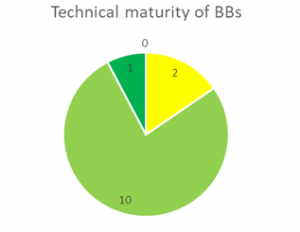

As Figures 5a), b) and c) show, none of the 13 BBs analyzed in this phase was Discarded for future implementation and reuse. The highest level of maturity was achieved in the administrative context, where almost half of the BBs are considered to be completely aligned with current EU policies and only one BB (IAL) is denoted as unstable. From a technical maturity perspective, most of the BBs are implemented and running, while 2 (IAL and MOR) are still unstable and under development. The same holds for operational maturity, where 3 BBs are deemed unstable: SMP/SML[1], IAL and MOR. Hence, it is reasonable to pinpoint IAL as the least mature BB, which, however, is not to be discarded for reuse and uptake by other projects.

Figure 6 provides insight into the general state of maturity of each of the BBs, showing that the overall maturity of each of the 13 building blocks integrates all three aspects of maturity. The figure also makes it evident that some of the BBs are more advanced in one aspect and less in others. For instance, the DE4A Playground demonstrates higher technical maturity, whereas its operational maturity is lower. Similarly, MOR’s maturity is mainly administrative, and to a lesser extent technical and operational.
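To make the per-dimension scoring concrete, the Python sketch below records one score per maturity dimension on the 0-3 scale described above and derives an overall value for each BB. The unweighted mean used as the overall value, the rule that a BB counts as Discarded when any dimension scores 0, and the placeholder BB names are assumptions for illustration; the text does not state how Figure 6 combines the three dimensions.

```python
from statistics import mean

# Scale used in both assessment phases.
SCALE = {0: "Discarded", 1: "Useful", 2: "Acceptable", 3: "Recommended"}
DIMENSIONS = ("technical", "administrative", "operational")


def validate(scores: dict[str, int]) -> None:
    for dim in DIMENSIONS:
        if scores.get(dim) not in SCALE:
            raise ValueError(f"{dim}: expected a score in 0-3, got {scores.get(dim)!r}")


def overall_maturity(scores: dict[str, int]) -> float:
    """Assumed aggregation: unweighted mean of the three dimension scores."""
    validate(scores)
    return float(mean(scores[dim] for dim in DIMENSIONS))


def discarded(assessments: dict[str, dict[str, int]]) -> list[str]:
    """BBs with at least one dimension scored 0 (assumed rule)."""
    for scores in assessments.values():
        validate(scores)
    return [bb for bb, s in assessments.items() if any(s[d] == 0 for d in DIMENSIONS)]


def least_mature(assessments: dict[str, dict[str, int]]) -> str:
    return min(assessments, key=lambda bb: overall_maturity(assessments[bb]))


if __name__ == "__main__":
    # Hypothetical BBs, not the DE4A assessment results.
    example = {
        "BB-A": {"technical": 3, "administrative": 2, "operational": 1},
        "BB-B": {"technical": 1, "administrative": 3, "operational": 1},
    }
    print(least_mature(example), discarded(example))
```

Keeping the three scores separate is what lets a profile such as "high administrative, low technical" remain visible, which a single first-phase score could not express.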

BB Openness

In this section, we analyzed the openness of each of the 13 BBs along several dimensions, i.e. properties: Extensibility, Customizability and Modularity. As Figure 7 shows, the most prevalent property is Extensibility, present in most of the BBs, the exceptions being the SSI User and Authority agents. Most of the BBs lend themselves to customization, and more than half are also modular.

It is important to note, however, that 75% of the partners stated that the implementation, i.e. the use, of some of the BBs is technology-, platform- or solution-dependent. For instance, IEM is DE4A-specific, while the CompanyData model is usable beyond DE4A. Furthermore, many components depend on a one-man company, introducing risks in terms of support and maintenance. Similarly, EBSI and federated services were denoted as solution/platform-dependent, especially in mixed environments.

Finally, half of the BBs were also marked as sector-specific. Thus, some are only relevant for public administrations, others for certain education environments (e.g., canonical data models in the SA pilot are specific to higher education), and some - for the business domain.

| # | Building Block | Ease of deployment | Ease of configuration | Ease of integration with other BBs/existing SW | Barriers for implementation |
|---|---|---|---|---|---|
| 1 | eDelivery (data exchange) | It is embedded into the Connector; Easy | 3/5; Tricky (certificates) | 3/5; Easy | Certificates; Support by one-man company |
| 2 | SMP/SML | No; Easy | No; Difficult | No; Easy | Certificates; Support by one-man company |
| 3 | DE4A Connector | No; Easy | No; Difficult | Fairly easy integration with data evaluator | No |
| 4 | DE4A Playground | N/A | N/A | Fairly easy integration with data evaluator | No |
| 5 | Information Exchange Model | Yes | 4/5; Yes | 4/5, Yes, Transparent to data evaluators | No |
| 6 | Canonical Data Models | Fairly easy | Yes | CompanyEvidence was easy to use with integration with BusReg; Fairly easy integration with data evaluator | No |
| 7 | ESL (implemented as part of SMP/SML) | 5/5 | 3/5 | 2/5 | No |
| 8 | IAL | 5/5 | 2/5 | 2/5 | Yes. Business cards from TOOP were reused, whose data model did not completely meet DE4A needs for the IAL functionality. However, a working solution was provided |
| 9 | MOR | N/A | N/A | N/A | N/A |
| 10 | SEMPER | Normal | Normal | Normal | No |
| 11 | SSI Authority agent | Yes | Yes | Yes | No |
| 12 | SSI User agent (mobile) | Yes | Yes | Yes | No |
| 13 | EBSI-ESSIF (CEF Blockchain) | Normal | Normal | Normal | No |

As a result of these experiences, practical recommendations for use and implementation of the BBs are also provided (see Table 4 below), together with pointers to some of the successful uses in the context of DE4A.

| # | Building Block | Practical recommendation for use | Used in: |
|---|---|---|---|
| 1 | eDelivery (data exchange) | To make certificate management easier | Used by multiple Interaction Patterns (IM, USI, S&N, L, DReg?); Can be considered common infrastructure |
| 2 | SMP/SML | To make certificate management easier | Part of eDelivery (idem) |
| 3 | DE4A Connector | The concept of a Connector with an integrated AS4 gateway allowed for easier integration of DEs and DOs in the USI pattern. Decoupling the exchange and business layers allows for abstraction and adds flexibility to the exchange model. The DE4A Connector reference implementation helped MS to integrate their data evaluators and data owners and enable connectivity between the states more easily; Make certificate management easier. | Used for easier integration of data evaluators and data owners |
| 4 | DE4A Playground | The DE4A playground with mock DE, mock DO test connectors and shared test SMP proved successful for validating national connectors and SMP installations and easier DE and DO integration. | Used for easier integration of DEs and DOs |
| 5 | Information Exchange Model | N/A | To fully understand the XSDs; Transparent to pilots |
| 6 | Canonical Data Models | Assess fit for use in SDG OOTS; Extend with additional attributes/data; It was difficult to find a common denominator of higher education evidence from the three MS for applications to higher education and applications for study grants (some data required by one MS might not be obtainable from another MS). This will become even more difficult when doing the same among all MS. Many MS also have difficulties to provide the evidence required for certain procedures, e.g. non-academic evidence for the applications for study grants that can contain privacy sensitive data. The pilot suggested to the DE4A Semantic Interoperability Solutions (WP3) the Europass data model as the basis for the higher education diploma scheme in order to be able to use the same schema for both USI and VC pattern (and thus between SDG OOTS and revised eIDAS regulation). | Used for canonical evidence exchange |
| 7 | ESL (implemented as part of SMP/SML) | Documented in the proper guidelines[1] | Extension of SMP/SML, i.e. business cards |
| 8 | IAL | Documented in the proper guidelines | Conceptually part of IDK, central component providing routing information |

Meeting pilot requirements

After the BBs were implemented in the pilots, we asked the pilot partners to also document the extent to which the BBs met the initial requirements of their particular piloting context. Their responses are listed in Table 5, which shows that most of the piloting requirements were met, although some BBs had to be extended with new functionality (as discussed in the first subsection of this analysis) to achieve the piloting objectives.

| # | Building Block | Piloting requirements that were met | Requirements that were not met |
|---|---|---|---|
| 1 | eDelivery (data exchange) | Message exchange; all | None |
| 2 | SMP/SML | All | None |
| 3 | DE4A Connector | Met (4.75 on a 1-5 scale; see D4.3); all | None |
| 4 | DE4A Playground | N/A | N/A |
| 5 | Information Exchange Model | Include the information needed to request and send evidence, including multiple pieces of evidence; all; met (4.5 on a 1-5 scale; see D4.3) | None |
| 6 | Canonical Data Models | All; met (4.5 on a 1-5 scale; see D4.3) | None; a missing element was added to the diploma schema for the final phase |
| 7 | ESL (implemented as part of SMP/SML) | Identify the DO’s evidence service | None |
| 8 | IAL | Identify the DO providing the evidence | The IAL data model does not support all the information on Administrative Territorial Units designed by WP3 |
| 9 | MOR | N/A | N/A |
| 10 | SEMPER | N/A | N/A |
| 11 | SSI Authority agent | Met (4 on a 1-5 scale; see D4.3) | None |
| 12 | SSI User agent (mobile) | Met (4.5 on a 1-5 scale; see D4.3) | None |
| 13 | EBSI-ESSIF (CEF Blockchain) | N/A | N/A |

Since the barriers to meeting the piloting requirements and to a smooth implementation can be of different natures, we analyzed them at a finer granularity. This classification of barriers was elaborated and defined in WP1 and can be found in Deliverable 1.8, “Updated legal, technical, cultural and managerial risks and barriers”. Table 6 presents the partners’ experiences in terms of implementation barriers, describing each barrier in the context in which it was encountered.

| Type of barrier | Description of barrier |
|---|---|
| Legal | All MSs need to accept DLT; how to authorize a DE to request a canonical evidence type about a specific subject |
| Organizational | Hiring/engaging internal staff; collecting signed information from partners to create the first PKI with Telesec, which took a lot of time and was very burdensome; manual trust management; horizontal trust model |
| Technical | Investing in EBSI infrastructure; Allow federated until 2027; the need to learn different external technologies and protocols, such as eDelivery, in a very short time; maintainability |
| Semantic | Terms become antiquated and need synonym hover text; no major issues; lack of canonical models at the semantic level, and lack of official mappings from local models to canonical models that would allow translation for each pair of MSs |
| Business | Allowing private service providers to improve usability and the UI; no major issues related to BBs |
| Political | AI and ML in the reuse of data; the obligation to use RegRep; following global standards |
| Human factor | Too few resources for software development; the availability of the personnel assigned to DE4A, which more often than not fell short of expectations; the withdrawal of some partners (such as eGovlab); usability |

Figure 8 further details the level of criticality of the listed barriers in the context of DE4A.

All types of barriers included highly critical elements that had to be addressed, but the technical barriers were the most critical during implementation. These mainly related to the need to learn different external technologies and protocols, such as eDelivery, in a very short time, but also to maintainability and to the sustainability of certain regulatory requirements related to the technical implementation. Across all types of barriers, strong emphasis was placed on the time frames needed to meet certain requirements, which were often at odds with the technical readiness of the existing infrastructure and with people's ability to respond to the required changes. Finally, the amount of available resources was a factor in all barriers, in terms of scarce human resources, technical expertise, administrative procedures, legislative readiness, infrastructure and staff availability, etc.

Performance and Non-Functional Requirements

The same classification used for the barriers above is also applied to the various aspects (dimensions) of the building blocks, which allows us to analyze the BBs' performance at a more granular level.

In that sense, Figure 9 shows the importance of each of these aspects (legal, organizational, technical, semantic, business, political and human factors) in the context of DE4A. In other words, the responding partners indicated which aspects were most important in their experience with piloting and implementation. The assessed BBs mostly performed satisfactorily across all dimensions, and even exceeded expectations on some technical and semantic traits. However, there is a (small) subset of traits across most dimensions on which the BBs underperformed. These mainly correspond to the barriers and unmet requirements discussed earlier in this analysis, and some of them are also listed later in this section.

In terms of the overall performance of each building block in the context of DE4A, Figure 10 shows that the only BB rated as underperforming is the IAL, which was also pinpointed in the maturity analysis in each of the technical, administrative and operational aspects.

Although DE4A defined no specific non-functional requirements (NFRs) for performance, availability or scalability, and no performance tests were carried out, this section aims to capture the partners’ perception of non-functional aspects in the context of DE4A. Table 1 provides an overview of the partners’ claims.

| Type of NFR issue | Encountered | Foreseen |
|---|---|---|
| Performance | Response times and uptime for some components | Connectors and SMP/SML can become single points of failure if they develop performance issues in the future, when many more DEs and DOs join |
| Availability | Yes, due to software and configuration issues | Yes, including external services |
| Scalability | Yes; components were not designed for high-availability deployments or for flexible scaling | Depending on which components are used, support and development may depend on a one-person company |

Patterns

Some of the building blocks are used only for specific interaction patterns, while others span multiple patterns. In this section, we analyze the correlation between the DE4A patterns and each of the 13 BBs, per pilot. As Figure 11 shows, the most used pattern is User Supported Intermediation, closely followed by Verifiable Credential, whereas the least used are the Push and Business patterns. However, this does not imply that these patterns are less useful, only that they were exploited to a lesser extent in DE4A, depending on the pilots’ needs. For more documentation and guidelines on when and how each of these patterns can be used and implemented, please see the relevant pilot wiki.

The S&N and LKP patterns are used in DBA, but should also be applicable in non-business contexts.

The use of each of these patterns per building block is shown in Figure 12.

Trust, Identity, Security, Privacy, Protection

There are various trust models in use in DE4A, depending on the interaction patterns used. Each of these patterns comes with specifics related to authentication/establishing identity, security controls and possible privacy issues. This applies both to data at rest and to data in transit. Figure 13a) shows the nature and number of the specific security/privacy issues encountered during implementation.

Most of the issues relate to security and arise from record matching and difficulties with certificates. The few privacy issues relate to data protection and mainly concern the use of eDelivery and the four-corner model during piloting.

The issues reported as being introduced by the use of a BB (Figure 13b)) mainly relate to availability and connectivity, and to the use of certificates. However, these issues are not due to the nature of the BB or to missing functionality, but rather to the absence of improvements that were needed to meet the implementation requirements. Thus, they do not affect the reusability of any of the assessed BBs.

To analyze the security issues encountered in greater depth, the survey asked about the security mechanisms available to handle and address such issues in terms of confidentiality, integrity and availability, as well as the BB features used for that purpose. The cases in which concrete BB features were employed to address security issues are presented in the charts of Figure 14 a), b) and c).

More concretely, information confidentiality mechanisms used within the pilots were: eIDAS standard mechanisms, encryption and verification, passwords and security tokens.

Information integrity mechanisms used within the pilots were: hashing, access control and digital signatures.

Finally, information availability mechanisms used within the pilots were: cloud server deployment, redundancy, firewalls and DDoS attack prevention.
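To illustrate how hashing supports the integrity mechanisms listed above, here is a minimal Python sketch. It is not taken from any DE4A component: the function names and the sample payload are hypothetical. It assumes the digest travels with the message and is itself protected (for example, covered by a digital signature or sent over an authenticated channel), since an attacker could otherwise simply recompute an unprotected hash.

```python
import hashlib
import hmac

# Hypothetical illustration only; not part of any DE4A building block.
# The sender computes a SHA-256 digest of the payload; the receiver
# recomputes it and compares. This detects modification only if the
# digest itself is protected, e.g. by a digital signature.


def sha256_digest(payload: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of a payload."""
    return hashlib.sha256(payload).hexdigest()


def verify_integrity(payload: bytes, expected_digest: str) -> bool:
    """Recompute the digest and compare it in constant time."""
    return hmac.compare_digest(sha256_digest(payload), expected_digest)


if __name__ == "__main__":
    evidence = b"<Evidence>hypothetical diploma record</Evidence>"
    digest = sha256_digest(evidence)  # assumed to be signed by the sender
    print(verify_integrity(evidence, digest))         # True
    print(verify_integrity(evidence + b" ", digest))  # False
```

The constant-time comparison (`hmac.compare_digest`) avoids leaking how many leading characters matched; in practice, signing the message (or its digest) is what binds the integrity check to the sender's identity.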

In most (80%) of the cases, the security mechanisms were used to counter specific cyber threats (Figure 15a)). In half of these cases, the vulnerability to a cyberattack was introduced by the employed BBs (or their features) (Figure 15b)).

The features reported to introduce such vulnerabilities were certain libraries (such as log4j and the Spring Framework), and only at a certain point during piloting. However, these vulnerabilities were detected and fixed in time. Other vulnerabilities that were encountered and addressed were DoS and an increased risk of data hijacking. To address these vulnerabilities, adequate countermeasures were employed, such as access control, channel encryption, authentication and digital signatures. For example, all communications were secured using certificates so that they are encrypted. In addition, the playground components were deployed with redundancy.